LangChain Building Blocks: Tools, Templates & Memory Walkthrough

Part 3 of LangChain Mastery

5/28/2025

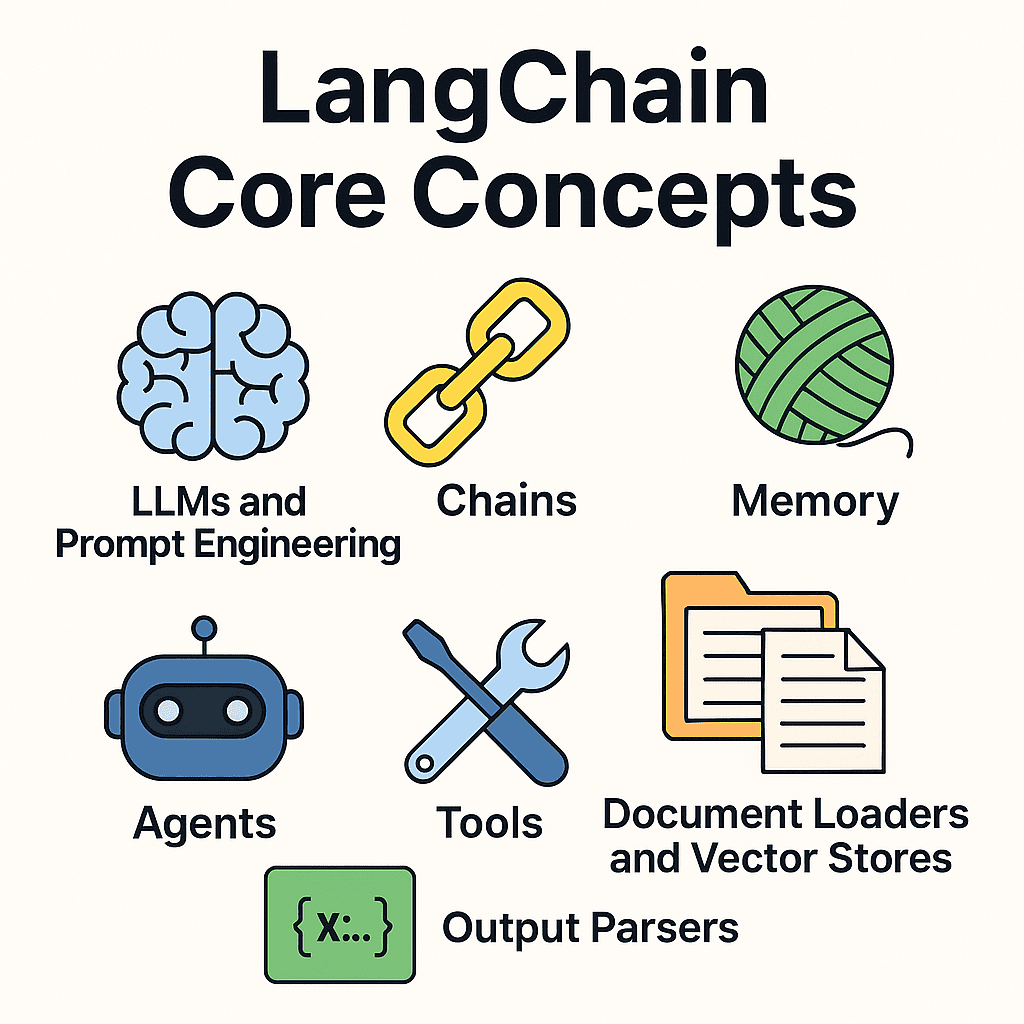

In the previous module, we explored the core concepts of LangChain — what chains, agents, tools, and memory are and why they matter. Now it’s time to connect those pieces and walk through how to use them together to build a context-aware, multi-functional LLM application.

This module focuses on practical integration:

- 🧠 Prompt templates + LLM + memory = intelligent chatbot

- 🔧 Tools + chains = agents with real-world capabilities

- 📦 Document loaders + vector stores = RAG-powered search

Each section below includes guidance, tips, and 🔗 Hands-On links so you can build while you learn.

🧠 LLMs and Prompt Engineering

Large Language Models (LLMs) like GPT, Claude, or LLaMA are the brains behind LangChain apps. LangChain makes it easy to craft effective prompts using:

- PromptTemplate: inject variables dynamically

- Few-shot examples for better completions

- Reusability across multiple chains

Example:

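```python
# On LangChain 0.x, `from langchain.prompts import PromptTemplate` also works.
from langchain_core.prompts import PromptTemplate

# {concept} is a placeholder that is filled in at format time.
prompt = PromptTemplate.from_template("Explain {concept} in simple terms.")

print(prompt.format(concept="quantum computing"))
# Explain quantum computing in simple terms.
```

🔗 ✍️ Hands-On: LangChain Prompt Templates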

🔁 Chains

Chains are the core LangChain abstraction for combining LLM calls, prompts, and external tools into workflows.

🧱 Common Chain Types:

- LLMChain: a basic prompt → LLM → output flow

- SequentialChain: passes output of one chain into the next

- RouterChain: routes inputs to different chains dynamically

Think of chains like pipelines — where each block transforms or enriches the response before moving on.

🔗 Hands-On: LangChain Chains

🧵 Memory

LLMs are stateless: each call sees only the prompt you send, so earlier messages are forgotten unless you include them again. LangChain provides memory modules to maintain context across turns.

🔍 Types of Memory:

- ConversationBufferMemory: retains the entire chat history

- ConversationSummaryMemory: uses the LLM to keep a running summary of the conversation

- ConversationBufferWindowMemory: keeps only the last k exchanges, so prompts stay short

Add memory to chains or agents to make your apps feel intelligent and consistent.

🔗 Hands-On: LangChain Memory

🤖 Agents

Agents use LLMs to reason about a task and decide which tools to use at runtime.

Unlike chains, which follow a fixed sequence of steps, agents choose their next action dynamically in a thought → action → observation loop.

🔄 Popular Agent Types:

- ReAct Agent: follows a Reasoning + Acting framework

- Tool-Using Agents: plan multi-step tool executions

- ZeroShotAgent: chooses tools from their descriptions alone, without worked examples in the prompt

Agents are great for building autonomous assistants or decision engines.
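To make the thought → action → observation loop concrete, here is a framework-free sketch rather than LangChain's agent API. `scripted_llm` is a hypothetical stand-in that imitates a ReAct-style model's text output, and `capital_lookup` is a hypothetical tool; a real agent would call an LLM at each step and parse its output in a similar way.

```python
import re
from typing import Callable

# Hypothetical tool registry: tool name -> function taking and returning a string.
TOOLS: dict[str, Callable[[str], str]] = {
    "capital_lookup": lambda country: {"France": "Paris", "Japan": "Tokyo"}.get(country, "unknown"),
}

# Matches lines like "Action: capital_lookup[France]".
ACTION_RE = re.compile(r"Action: (\w+)\[(.*)\]")


def scripted_llm(scratchpad: str) -> str:
    """Hypothetical stand-in for an LLM: acts once, then answers from the observation."""
    if "Observation:" not in scratchpad:
        return "Thought: I need to look up the capital.\nAction: capital_lookup[France]"
    observation = scratchpad.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I now know the answer.\nFinal Answer: {observation}"


def run_agent(question: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    scratchpad = f"Question: {question}\n"
    for _ in range(max_steps):
        step = llm(scratchpad)  # Thought: the model reasons and may name an action
        scratchpad += step + "\n"
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip()
        match = ACTION_RE.search(step)
        if match is None:
            raise ValueError(f"Could not parse an action from: {step!r}")
        tool_name, tool_input = match.groups()
        observation = TOOLS[tool_name](tool_input)  # Action: run the chosen tool
        scratchpad += f"Observation: {observation}\n"  # Observation: fed into the next prompt
    raise RuntimeError("Agent did not reach a final answer within max_steps")


answer = run_agent("What is the capital of France?", scripted_llm)
print(answer)  # Paris
```

`max_steps` caps the loop so a confused model cannot call tools forever; LangChain's `AgentExecutor` has a similar `max_iterations` setting.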

🔗 Hands-On: LangChain Agents

🛠️ Tools

Tools allow your LLM to interact with the outside world — just like plugging apps into a smartphone.

Built-in tools include:

- SerpAPI: for search

- Wikipedia: for factual data

- PythonREPLTool: run Python code

- Requests tools (e.g., RequestsGetTool): call HTTP APIs

You can define your own tools to call internal APIs, databases, or cloud services.

🔗 Hands-On: LangChain Tools

📄 Document Loaders and Vector Stores

LangChain excels at Retrieval-Augmented Generation (RAG) using vector databases.

🔍 Loaders:

- PDFs, web pages, CSVs, Notion, YouTube, etc.

- Split content into chunks using TextSplitter

🧠 Vector Stores:

- FAISS, Chroma, Pinecone, Weaviate

- Used for fast similarity search during retrieval

This is the foundation for Q&A apps, semantic search, and AI over documents.

🔗 Hands-On: Document Loaders and Vector Stores

🧾 Output Parsers

LLMs return free-form text; LangChain's output parsers turn it into structured data your code can rely on.

- StrOutputParser: plain text

- CommaSeparatedListOutputParser: list of values

- PydanticOutputParser: validate output structure

- JsonOutputParser or custom parsers for JSON responses

Parsing helps you bridge LLM outputs with other code components reliably.

🔗 Read More: Output Parsers in LangChain

🏗️ LangChain Building Blocks - Recap

LangChain gives you the LEGO bricks to build powerful AI systems:

- LLM: Language models that power LangChain applications, enabling text generation and understanding through cloud-based or local integrations.

- Prompt Engineering: Crafting reusable prompt templates with dynamic inputs to guide LLMs for consistent, task-specific outputs.

- Chains: Sequences of components (e.g., prompt, LLM, tools) combined to create structured workflows for complex tasks.

- Memory: Modules like ConversationBufferMemory that store and recall conversation history for context-aware interactions.

- Agents: Autonomous systems that use LLMs and tools to make decisions and perform tasks based on user input.

- Tools: Utilities (e.g., Google Search, Python REPL) that agents leverage to interact with external systems or perform specific functions.

- Document Loaders and Vector Stores: Tools for loading documents and indexing them in vector stores (e.g., FAISS) for efficient retrieval.

- Output Parser: Mechanisms like Pydantic or JSON parsers to structure and validate LLM outputs for downstream use.

Each one adds structure, reasoning, or real-world interaction to your LLM application.

Next: We’ll put these concepts into action by building real apps with LangChain — including chatbots, document-based Q&A, and agent workflows.