Introduction to LangChain

Part 2 of LangChain Mastery

5/27/2025

LangChain is an open-source framework that simplifies the process of building applications powered by large language models (LLMs).

In this module, you’ll learn:

- What LangChain is and how it differs from directly calling an LLM API

- Key use cases such as chatbots, retrieval-augmented generation (RAG), and smart assistants

- Why modularity, memory, and integration features make LangChain a strong choice for building real-world AI tools

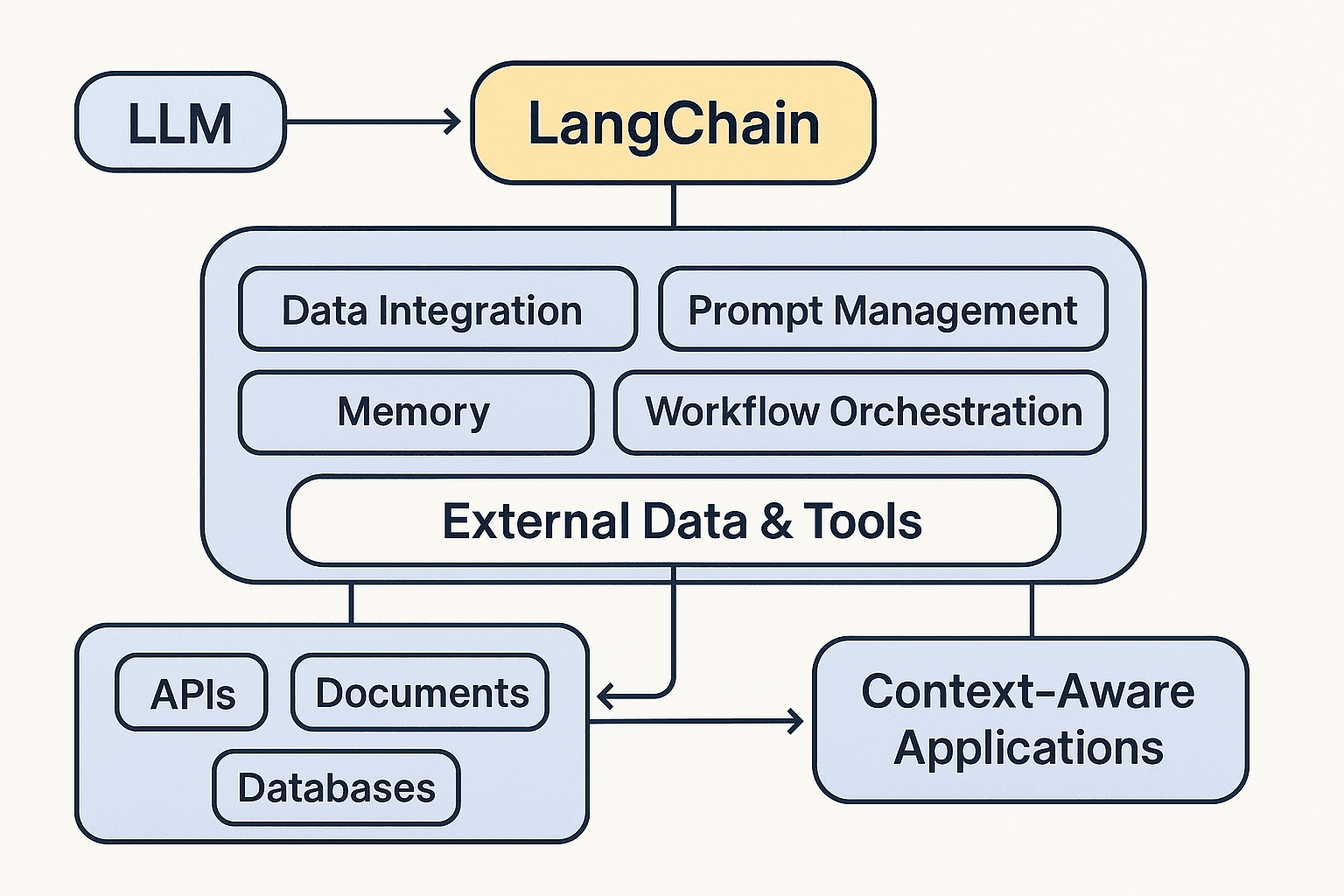

LangChain acts as the connective tissue between powerful language models and the real world. Instead of writing brittle one-off prompts or manually wiring APIs, LangChain offers a structured framework that handles prompt management, memory, external data integration, and multi-step logic.

The diagram above illustrates how LangChain builds on raw LLM calls to create context-aware applications. It does this by orchestrating tools, databases, APIs, and workflows under one consistent abstraction layer.
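To make "external data integration" concrete, here is a toy, dependency-free sketch of the retrieval-augmented pattern. It picks the stored document most relevant to a question and places that document in the prompt. This is not LangChain code. The documents, the word-overlap scoring (a crude stand-in for embedding search), and the names `retrieve` and `build_prompt` are all hypothetical.

```python
# Toy illustration of retrieval-augmented generation (RAG), standard library only.
# NOT LangChain code: DOCS, the word-overlap scoring, and the prompt wording are
# hypothetical stand-ins chosen for this sketch.
import re

DOCS = [
    "LangChain provides prompt templates for reusable prompts.",
    "Vector stores hold embeddings for semantic search.",
    "Agents decide which tool to call next.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents sharing the most words with the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Place the retrieved context into the prompt that would go to the model."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


if __name__ == "__main__":
    question = "What do agents do?"
    print(build_prompt(question, retrieve(question, DOCS)))
```

A real pipeline would replace the word overlap with embeddings and a vector store, which is the kind of component LangChain provides integrations for.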

🚀 What is LangChain?

LangChain provides essential abstractions for:

- Connecting to LLMs (such as OpenAI's GPT models, Anthropic's Claude, or locally hosted models)

- Managing prompts and dynamic inputs

- Integrating external tools, APIs, and memory

- Building chains and agents for advanced workflows

It acts as a “framework layer” over LLM APIs, helping you create context-aware, tool-integrated applications with less boilerplate.
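As a rough mental model of this framework layer, the sketch below composes a prompt template, a model call, and an output parser into one pipeline. It chains them with a `|` operator, loosely mirroring the `prompt | model | parser` style LangChain uses. Everything here is a hypothetical standard-library stand-in (`Step`, `fake_model`, `parse_answer`). It shows the shape of the idea, not LangChain's actual API.

```python
# Dependency-free sketch of the "framework layer" idea: template -> model -> parser.
# Step, fake_model, and parse_answer are hypothetical names, NOT LangChain APIs.
from typing import Any, Callable


class Step:
    """Wraps a function so steps can be chained with the | operator."""

    def __init__(self, fn: Callable[[Any], Any]) -> None:
        self.fn = fn

    def __or__(self, other: "Step") -> "Step":
        # Compose: run self first, then feed its output into other.
        return Step(lambda x: other.fn(self.fn(x)))

    def invoke(self, value: Any) -> Any:
        return self.fn(value)


# 1. Prompt template: fill named variables into a reusable string.
prompt = Step(lambda variables: "Summarize {topic} in one line.".format(**variables))

# 2. Stand-in model: a real app would call an LLM API here.
fake_model = Step(lambda text: f"  ANSWER: {text.upper()}  ")

# 3. Output parser: turn raw model text into a clean value.
parse_answer = Step(lambda raw: raw.strip().removeprefix("ANSWER: "))

chain = prompt | fake_model | parse_answer

if __name__ == "__main__":
    print(chain.invoke({"topic": "LangChain"}))
```

Each step only agrees on its input and output, so `fake_model` could be swapped for a real LLM call without touching the template or the parser. This is the kind of modularity the list above describes.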

🆚 Why LangChain Over Raw LLM APIs?

| Feature | Direct OpenAI API | LangChain |
|---|---|---|
| Prompt Templates | ❌ Manual | ✅ Built-in |
| Conversation Memory | ❌ Manual | ✅ Ready-made modules |
| Agents and Tools | ❌ Custom code | ✅ Ready to use |
| Document Search (RAG) | ❌ Not native | ✅ Supported |
| Deployment | ❌ Manual | ✅ LangServe, LangGraph Platform |
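To see what the table's "Manual" entry means for conversation memory, consider that chat endpoints such as OpenAI's Chat Completions API are stateless. The application has to store the history and resend it on every request. The sketch below does that bookkeeping by hand. `fake_chat_api` and `ConversationBuffer` are hypothetical names, and the fake model only reports how many messages it received.

```python
# Sketch of "manual" conversation memory with a stateless chat API.
# fake_chat_api is a hypothetical stand-in for a real endpoint; it just reports
# how many messages it received so the growing history is visible.


def fake_chat_api(messages: list[dict[str, str]]) -> str:
    """Pretend model: replies with the count of messages it was sent."""
    return f"I received {len(messages)} message(s)."


class ConversationBuffer:
    """Keeps the full message history and resends it on every turn."""

    def __init__(self, system_prompt: str) -> None:
        self.messages: list[dict[str, str]] = [
            {"role": "system", "content": system_prompt}
        ]

    def ask(self, user_text: str) -> str:
        self.messages.append({"role": "user", "content": user_text})
        reply = fake_chat_api(self.messages)  # whole history is sent each call
        self.messages.append({"role": "assistant", "content": reply})
        return reply


if __name__ == "__main__":
    convo = ConversationBuffer("You are a helpful assistant.")
    print(convo.ask("Hi, I'm Ana."))
    print(convo.ask("What's my name?"))
```

The history, and with it the request size, grows every turn. LangChain's memory components are intended to manage this bookkeeping for you.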

LangChain makes real-world AI app development more modular and extensible, and its deployment tooling shortens the path from prototype to production.

In the next module, we’ll explore the core building blocks of LangChain (Chains, Agents, Memory, and Tools) and how they interact to power intelligent systems.