Kick Start - LangChain

Part 1 of LangChain Mastery

5/27/2025

📘 Introduction

LangChain is the missing layer between large language models (LLMs) and real-world applications. Whether you’re building a chatbot, a retrieval-augmented generation (RAG) knowledge base, or an intelligent agent, LangChain gives you the tools to do it quickly, flexibly, and at scale.

In this series, you’ll learn LangChain from the ground up — starting with what it is, why it’s useful, and how to get your first project running in minutes.

🚀 What You’ll Learn in This Module

- What LangChain is and where it fits in the LLM stack

- Real-world use cases LangChain simplifies

- Key benefits over using raw LLM APIs

- How to install and configure LangChain for your projects

🤖 What is LangChain?

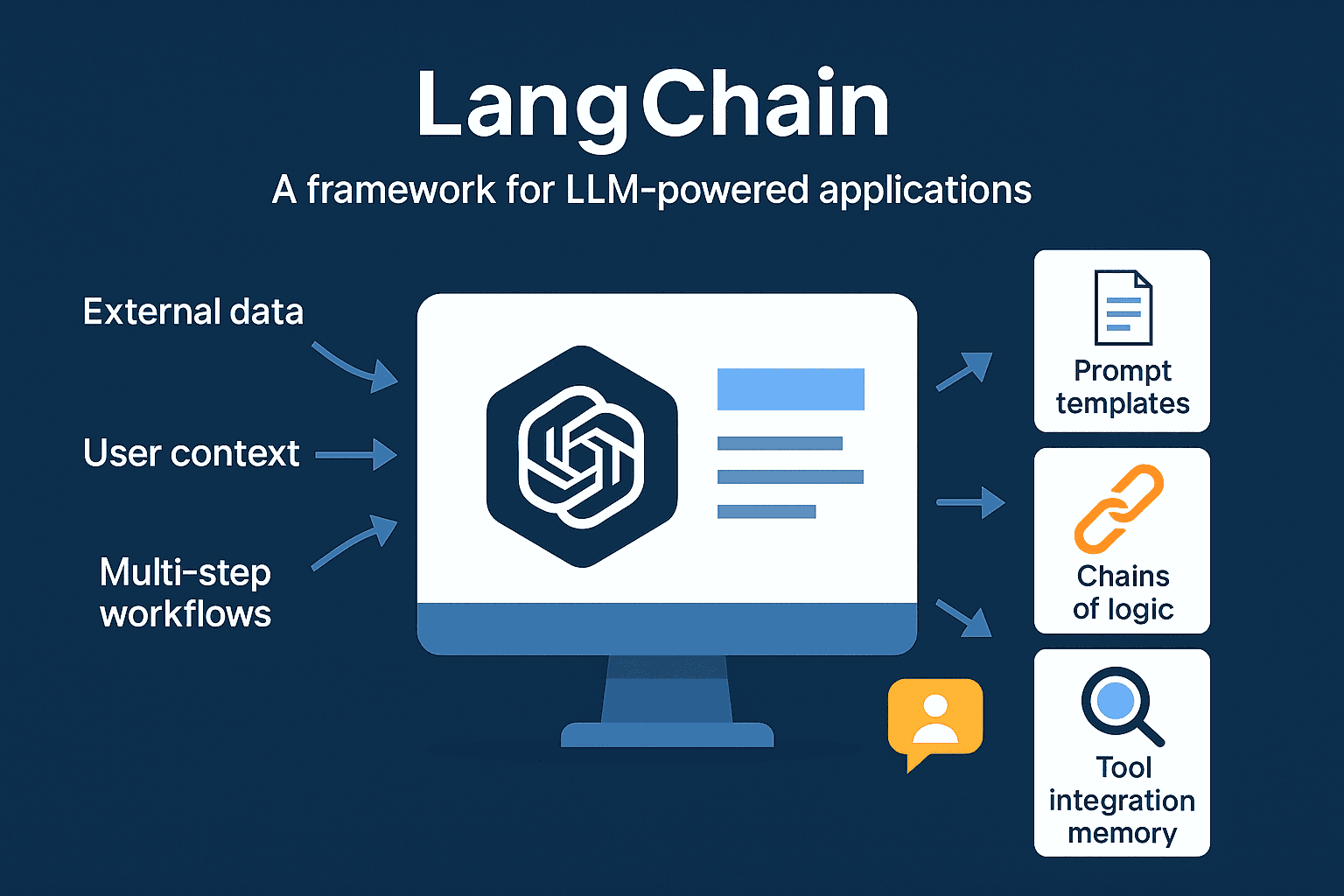

LangChain is a framework for building LLM-powered applications that need to interact with:

- External data (APIs, files, databases)

- User memory and context

- Multi-step workflows

- Tool-augmented reasoning
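"Tool-augmented reasoning" generally means the model picks a tool and its arguments, the application runs that tool, and the result is fed back to the model. The dispatch step can be sketched in plain Python as below. This is a hypothetical illustration: the `TOOLS` registry, the two tool functions, and the JSON shape of the model's "decision" are assumptions made for this example, not LangChain's agent protocol, and the decision is hard-coded where a real LLM would generate it.

```python
# Hypothetical tool-dispatch sketch: the tool names, the registry, and the
# JSON "decision" format are illustrative, not LangChain's agent protocol.
import json
from typing import Callable


def add(a: float, b: float) -> float:
    return a + b


def word_count(text: str) -> int:
    return len(text.split())


# Registry mapping tool names (as the model would refer to them) to functions
TOOLS: dict[str, Callable[..., object]] = {"add": add, "word_count": word_count}


def run_tool_call(decision_json: str) -> object:
    # Parse the model's choice, look up the tool, and call it with its arguments
    decision = json.loads(decision_json)
    tool = TOOLS.get(decision["tool"])
    if tool is None:
        raise ValueError(f"Unknown tool: {decision['tool']}")
    return tool(**decision["args"])


# Hard-coded here; in an agent, the LLM would emit this decision
model_decision = '{"tool": "add", "args": {"a": 2, "b": 3}}'
result = run_tool_call(model_decision)
print(result)  # 5
```

In a full agent loop, the tool's result would be appended to the conversation and sent back to the model so it can decide on the next step.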

Instead of handling prompts and responses manually, LangChain provides abstractions for:

- Prompt templates

- Chains of logic

- Conversational memory

- Tool integration (e.g., Google Search, SQL)
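To make prompt templates and chains concrete, here is a minimal plain-Python sketch that runs without LangChain or an API key. The `Runnable` class, `prompt_template`, `fake_llm`, and `strip_output` are hypothetical stand-ins written for this example: they mimic the general shape of pipe-style composition (LangChain's own components can also be joined with `|`), but they are not LangChain's implementation.

```python
# Hypothetical sketch: Runnable, prompt_template, and fake_llm are illustrative
# stand-ins written for this example, not LangChain's actual classes.
from typing import Any, Callable


class Runnable:
    """Wraps a function so steps can be chained with the | operator."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def __or__(self, other: "Runnable") -> "Runnable":
        # Feed this step's output into the next step's input
        return Runnable(lambda value: other.invoke(self.invoke(value)))


def prompt_template(template: str) -> Runnable:
    # Fill {placeholders} from a dict of variables
    return Runnable(lambda variables: template.format(**variables))


# Fake model: echoes the prompt instead of calling a real LLM API
fake_llm = Runnable(lambda prompt: f"[model answer to: {prompt}]")

# Parser step: trim surrounding whitespace from the model output
strip_output = Runnable(lambda text: text.strip())

chain = prompt_template("Explain {concept} like I'm 5") | fake_llm | strip_output
result = chain.invoke({"concept": "black holes"})
print(result)  # [model answer to: Explain black holes like I'm 5]
```

The template is written once and reused with different variables, and each step only needs to accept the previous step's output, which is what makes the pieces swappable.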

🧠 Core Use Cases

- Conversational agents (chatbots with memory)

- RAG pipelines (retrieve + generate)

- Assistants for coding, research, finance, etc.
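The "retrieve + generate" pattern behind RAG can be sketched in plain Python. This is a toy under stated assumptions: `documents`, `retrieve`, and `fake_llm` are hypothetical, retrieval ranks documents by simple word overlap rather than embeddings, and the model call is faked so the example runs offline. In real applications, a retriever backed by a vector store would replace the `retrieve` step.

```python
# Hypothetical RAG sketch: documents, retrieve(), and fake_llm are illustrative;
# real pipelines typically use embeddings and a vector store instead of word overlap.
import re

documents = [
    "LangChain provides prompt templates, chains, memory, and tools.",
    "Vector stores hold embeddings so similar text can be found quickly.",
    "Express.js is a web framework for Node.js.",
]


def tokenize(text: str) -> set[str]:
    # Lowercase word set; punctuation is ignored
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    # Retrieve: rank documents by how many words they share with the question
    q_words = tokenize(question)
    ranked = sorted(docs, key=lambda d: len(q_words & tokenize(d)), reverse=True)
    return ranked[:k]


def fake_llm(prompt: str) -> str:
    # Stand-in for a model call: just reports how long the prompt is
    return f"(answer generated from a {len(prompt)}-character prompt)"


def answer(question: str) -> tuple[str, str]:
    context = "\n".join(retrieve(question, documents))
    # Augment: put the retrieved text into the prompt, then generate
    prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
    return prompt, fake_llm(prompt)


prompt, reply = answer("What do vector stores hold?")
print(prompt)
print(reply)
```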

🔍 Why Use LangChain?

LangChain addresses many of the limitations of calling an LLM API directly:

| Feature | Direct LLM (e.g., OpenAI API) | LangChain |
|---|---|---|
| Prompt templating | Manual string formatting | Reusable, structured templates |
| Memory (conversations) | None built in; you resend history yourself | Built-in memory modules |
| Workflow composition | Manual | Chains and agents |
| Tool use (APIs, search) | Manual integration | Plug-and-play tools |
| Retrieval-augmented generation (RAG) | Build from scratch | Native support via retrievers |
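The "Memory (conversations)" row reflects the fact that a plain chat request only sees the messages included in that request, so the application must store earlier turns and send them again. A minimal buffer-style memory can be sketched as follows; `ConversationBuffer`, `fake_chat_model`, and `chat` are hypothetical names for this example, and LangChain's memory modules follow a similar idea through their own APIs.

```python
# Hypothetical sketch of buffer-style conversation memory.
# ConversationBuffer and fake_chat_model are illustrative, not LangChain APIs.


class ConversationBuffer:
    def __init__(self) -> None:
        # Each entry is a {"role": ..., "content": ...} message
        self.messages: list[dict[str, str]] = []

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})


def fake_chat_model(messages: list[dict[str, str]]) -> str:
    # Stand-in for a stateless API: it only "knows" what is in this request
    user_turns = [m["content"] for m in messages if m["role"] == "user"]
    return f"I have seen {len(user_turns)} user message(s); latest: {user_turns[-1]!r}"


def chat(memory: ConversationBuffer, user_input: str) -> str:
    memory.add("user", user_input)
    # Replay the whole history on every call, since the model keeps no state
    reply = fake_chat_model(memory.messages)
    memory.add("assistant", reply)
    return reply


memory = ConversationBuffer()
print(chat(memory, "Hi, I'm Ada."))
print(chat(memory, "What's my name?"))
```

Replaying the full buffer is the simplest strategy; longer conversations usually need trimming or summarizing so the history fits within the model's context window.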

LangChain is to LLM-based apps roughly what Express.js or Django is to web apps.

⚙️ Installation and Setup

Let’s get your environment ready.

1. Install LangChain

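```bash
pip install langchain langchain-openai
```

Note: You’ll also need an OpenAI API key. Sign up at platform.openai.com.

2. Set Environment Variables

On macOS or Linux:

```bash
export OPENAI_API_KEY="your-api-key-here"
```

3. Minimal Setup

```python
# ChatOpenAI lives in the separate langchain-openai package
from langchain_openai import ChatOpenAI
from langchain_core.prompts import PromptTemplate

# Chat model client; reads OPENAI_API_KEY from the environment
llm = ChatOpenAI()

# Reusable template with a single {concept} variable
prompt = PromptTemplate.from_template("Explain {concept} like I'm 5")

# Compose prompt and model into a chain with the | operator
chain = prompt | llm

# invoke() returns a chat message; .content holds the generated text
print(chain.invoke({"concept": "black holes"}).content)
```

This is your “Hello World” with LangChain. In a handful of lines, you built a simple explainer bot.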

What’s Next?

In the next module, you’ll explore the core building blocks of LangChain:

- Chains

- Memory

- Agents

- Tools

- Document Loaders

- Vector Stores

Let’s go from simple prompts to full workflows — with real-world, hands-on code.