LangChain Emerging Trends

Part 11 of LangChain Mastery

5/27/2025

As LangChain matures into one of the most versatile frameworks for LLM-based applications, new frontiers are emerging that push its capabilities beyond traditional text prompts and chat interfaces. This part of the LangChain Mastery series is optional reading, but worth your time: we look at what’s ahead.

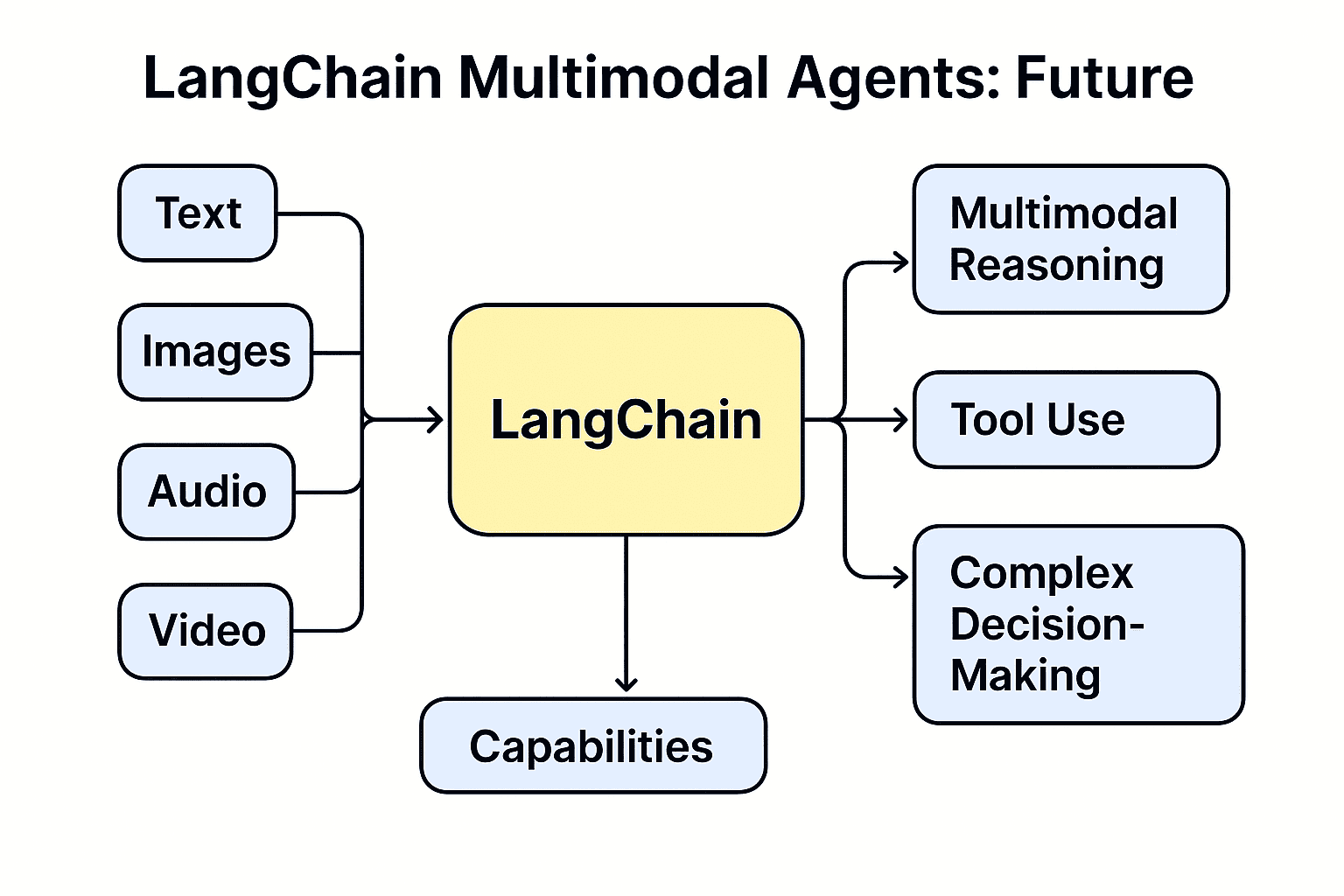

🎨 1. LangChain + Multimodal LLMs

Multimodal models such as GPT-4o, LLaVA, and Claude accept inputs beyond text, including images and, depending on the model, audio or video.

🔍 What’s possible?

- Image captioning and OCR-based Q&A systems.

- LLMs that reason over diagrams, dashboards, and photos.

- Using LangChain’s MultiModalRetriever and adapters to blend modalities.

💡 LangChain is already experimenting with Vision + Language pipelines. Watch for native integration with LLaVA and Gemini APIs soon.
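As a rough sketch of what blending modalities looks like at the message level, the snippet below packs a question and an image into the content-block layout that OpenAI-style vision chat models accept: a text block plus an `image_url` block carrying a base64 data URL. The helper name `build_image_message` and the sample bytes are made up for illustration. In a LangChain pipeline the resulting `content` list would typically be passed as `HumanMessage(content=...)` to a vision-capable chat model; that call is left out here so the example runs offline.

```python
import base64


def build_image_message(question: str, image_bytes: bytes, mime: str = "image/png") -> dict:
    """Build one user message that carries both text and an inline image.

    Hypothetical helper: the layout follows OpenAI-style content blocks;
    other providers may expect a different shape.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime};base64,{encoded}"}},
        ],
    }


# Placeholder bytes stand in for a real image file read with open(path, "rb").
message = build_image_message("What does this dashboard show?", b"\x89PNG placeholder")
print([block["type"] for block in message["content"]])
```

Keeping the image inline as a data URL avoids hosting the file, at the cost of a larger request payload.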

🧠 2. LangChain and Autonomous Agents

The rise of agentic AI is reshaping how tasks are executed. LangChain’s AgentExecutor + planning tools allow for:

- Autonomous goal decomposition.

- Tool invocation chaining (e.g., Search → Summarize → Act).

- Early integration with LangGraph and Task Execution Trees (TETs).
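The Search → Summarize → Act chain above can be pictured as plain function composition. The sketch below is not LangChain’s AgentExecutor: the three tool functions are hypothetical stubs with canned data, and a real agent would let the LLM pick the next tool at each step instead of following a fixed order.

```python
from typing import Callable, List


def search(query: str) -> List[str]:
    """Hypothetical stand-in for a web search tool: returns canned snippets."""
    corpus = {
        "multimodal llm": [
            "Multimodal models accept images and audio.",
            "They can answer questions about charts.",
        ]
    }
    return corpus.get(query.lower(), [])


def summarize(snippets: List[str]) -> str:
    """Hypothetical stand-in for an LLM summarizer: keeps each snippet's first sentence."""
    return " ".join(s.split(".")[0] + "." for s in snippets)


def act(summary: str) -> str:
    """Hypothetical action step: formats a report instead of calling an external system."""
    return f"REPORT: {summary}" if summary else "REPORT: nothing found"


def run_chain(query: str, steps: List[Callable]) -> str:
    """Feed each step's output into the next one, in order."""
    result = query
    for step in steps:
        result = step(result)
    return result


report = run_chain("multimodal llm", [search, summarize, act])
print(report)
```

Swapping the stubs for real tools keeps the same shape; what an agent adds is the decision about which step to run next.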

🔧 Example Use Cases

- Research agents that read + report from the web.

- Financial bots that monitor stocks and update dashboards.

- Workflow automation without traditional scripting.

🔧 Tip: Try combining LangChain Agents with open-source toolkits like CrewAI and AutoGen for parallel task routing.

🔮 3. Future of LangChain: What’s Coming

LangChain’s roadmap hints at major features and shifts:

- LangSmith-native debugging for tracing large workflows.

- Deeper LangGraph integration (state machines for AI agents).

- Improved plugin systems and tool composition.

- Automatic RAG optimization and vector routing strategies.
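To make the "state machines for AI agents" idea concrete, here is a minimal sketch in plain Python. It does not use the LangGraph API; the node names, the `run_graph` helper, and the `"END"` marker are all assumptions chosen for illustration. Each node is a function that returns an updated state, and each edge is a function that inspects the state and names the next node.

```python
from typing import Callable, Dict, List

State = Dict[str, List[str]]


def plan(state: State) -> State:
    """Node: decide which steps the agent should carry out."""
    return {**state, "steps": ["draft", "review"]}


def work(state: State) -> State:
    """Node: complete the next pending step."""
    next_step = state["steps"][len(state["done"])]
    return {**state, "done": state["done"] + [next_step]}


def after_work(state: State) -> str:
    """Edge: loop back to 'work' until every planned step is done."""
    return "work" if len(state["done"]) < len(state["steps"]) else "END"


def run_graph(
    nodes: Dict[str, Callable[[State], State]],
    edges: Dict[str, Callable[[State], str]],
    start: str,
    state: State,
    max_steps: int = 20,
) -> State:
    """Run nodes until an edge returns 'END'; max_steps guards against endless loops."""
    current, taken = start, 0
    while current != "END":
        if taken >= max_steps:
            raise RuntimeError("graph did not reach END within max_steps")
        state = nodes[current](state)
        current = edges[current](state)
        taken += 1
    return state


nodes = {"plan": plan, "work": work}
edges = {"plan": lambda s: "work", "work": after_work}
final = run_graph(nodes, edges, start="plan", state={"done": []})
print(final["done"])
```

Because the control flow lives in explicit nodes and edges, loops and branches are easier to trace and debug than when they are buried inside a single prompt chain.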

📣 LangChain is evolving from a prompt-chain builder to a modular agentic AI platform — suited for production-scale apps.

📘 Try This Exploration

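```python
# Requires OPENAI_API_KEY and SERPAPI_API_KEY in the environment.
# This uses the legacy agent API; newer LangChain releases deprecate
# initialize_agent and move these imports into separate packages.
from langchain.agents import initialize_agent, load_tools
from langchain.llms import OpenAI

llm = OpenAI(temperature=0)  # one shared model for the tools and the agent
tools = load_tools(["serpapi", "llm-math"], llm=llm)
agent = initialize_agent(tools, llm, agent="zero-shot-react-description", verbose=True)
agent.run("What are some use cases of multimodal LLMs and what's the market impact?")
```

Related Reading & Links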

- LangChain Agents and Toolkits

- LangGraph – Declarative Agent Framework

- OpenAI GPT-4o Multimodal Capabilities

- LangSmith Tracing + Debugging

🧭 TL;DR

LangChain is not just catching up with AI trends — it’s leading them. From multimodal pipelines to self-directed agents and future-proof integrations, the next chapter of LangChain promises even more powerful, personalized, and production-ready AI.

🛠️ Next: Let’s explore the challenges and limitations of LangChain.