LangChain Integrations and Ecosystem

Part 8 of LangChain Mastery

5/27/2025

LangChain’s ecosystem consists of several core components and tools that work together to streamline the development, testing, deployment, and monitoring of LLM-powered applications. These include:

🔗 API Integrations

- Google Search, Zapier, Wolfram Alpha, Weather APIs

🧠 Vector DB Support

- FAISS

- Chroma

- Pinecone

- Weaviate

- Qdrant

🤖 LLM Integrations

- OpenAI

- Anthropic

- Mistral

- Ollama

🧰 UI & Frameworks

- Streamlit

- Gradio

- FastAPI

🧩 Ecosystem Tools

- LangChain Core Framework: A Python and JavaScript framework for building LLM applications, offering modular components that connect LLMs to external data, tools, and memory.

  - Key features include the LangChain Expression Language (LCEL) for composing workflows, support for Retrieval-Augmented Generation (RAG), and agent-based architectures.

  - Use cases: chatbots, document summarization, code analysis, and synthetic data generation.

- LangSmith: A framework-agnostic platform for debugging, testing, evaluating, and monitoring LLM applications. It provides observability with prompt-level visibility, LLM-as-a-judge evaluators, and tools to optimize performance and reduce costs. It is useful for tracing agent interactions and for comparing LLMs on cost, performance, or latency when migrating between them.

- LangGraph: A framework for building stateful, scalable AI agents using a graph-based approach. It supports complex workflows, human-in-the-loop interactions, and streaming-native deployments, and has been adopted by companies such as LinkedIn, Uber, and GitLab for reliable, high-traffic agents.

- LangGraph Platform: Infrastructure for deploying and managing LangGraph agents at scale, with one-click deployment and horizontal scaling. Generally available as of May 2025, it supports long-running, bursty workloads.

- LangServe: A Python library for deploying LangChain runnables and chains as REST APIs, making applications accessible to end users. It simplifies moving from prototype to production.

- LangChain Templates: Pre-built, customizable reference architectures for common tasks such as RAG, chatbots, and content generation, enabling rapid prototyping and deployment.

- Open Agent Platform: A no-code platform for building customizable agents that integrates with tools such as LangConnect for RAG and with other LangGraph agents. It lets non-developers build AI solutions.
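LCEL composes steps with a `pipe` operation, so the output of one runnable becomes the input of the next. The dependency-free sketch below imitates that idea with a hypothetical `Step` type and `pipe` helper; it is not LangChain's API, and the "model" is a stand-in function so the example runs without an API key. In LangChain.js itself the equivalent chain is usually written as `prompt.pipe(model).pipe(parser)`.

```typescript
// Hypothetical, minimal imitation of LCEL-style composition (not LangChain's API).
// Each step is an async function from an input to an output.
type Step<I, O> = (input: I) => Promise<O>;

// Compose two steps: the output of `first` is fed into `second`.
function pipe<A, B, C>(first: Step<A, B>, second: Step<B, C>): Step<A, C> {
  return async (input: A) => second(await first(input));
}

// Step 1: fill a prompt template with the caller's variables.
const formatPrompt: Step<{ topic: string }, string> = async ({ topic }) =>
  `Write one sentence about ${topic}.`;

// Step 2: a stand-in "model" that echoes the prompt; a real chain would call an LLM here.
const fakeModel: Step<string, { content: string }> = async (prompt) => ({
  content: `MODEL REPLY TO: ${prompt}`,
});

// Step 3: an output parser that pulls the plain string out of the model message.
const toText: Step<{ content: string }, string> = async (message) => message.content;

// prompt -> model -> parser, analogous to prompt.pipe(model).pipe(parser) in LCEL.
const chain = pipe(pipe(formatPrompt, fakeModel), toText);

chain({ topic: "vector databases" }).then((text) => console.log(text));
// prints: MODEL REPLY TO: Write one sentence about vector databases.
```

Because every step shares one shape, any step (for example the model) can be swapped without touching the others, which is the property LCEL is built around.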

✨ Advanced Features

- Streaming

- Async chains

- Output Parsers

- Callbacks

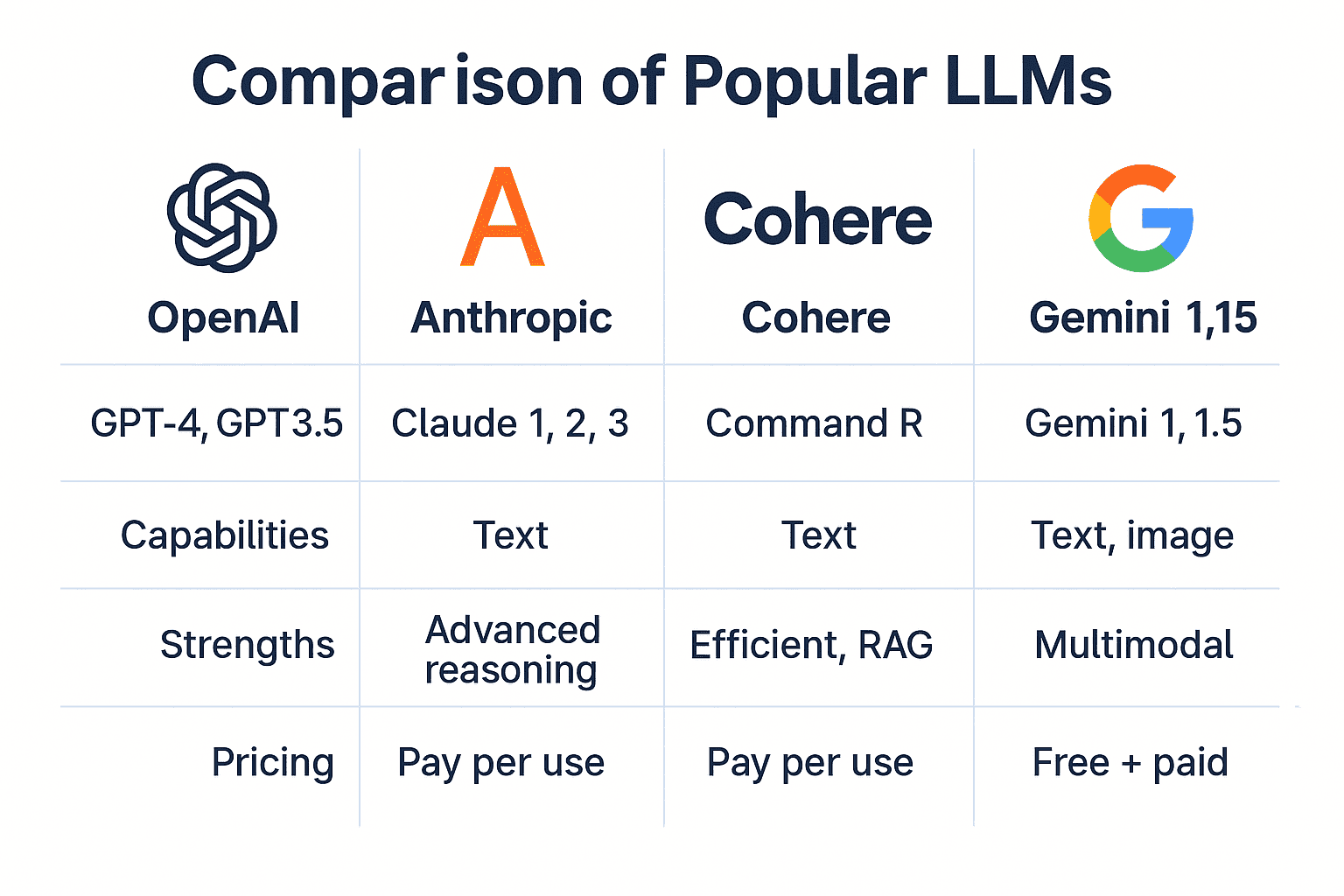
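Output parsers turn a model's raw text into structured data. The sketch below shows the core idea with a hypothetical `parseJsonReply` function that strips an optional Markdown code fence and parses the JSON inside. LangChain ships its own parsers (for example `JsonOutputParser` in `@langchain/core/output_parsers`); this is not their implementation.

```typescript
// Hypothetical output-parser sketch, not LangChain's JsonOutputParser.
const FENCE = "`".repeat(3); // built at runtime to avoid a literal fence in this block

// Extract and parse JSON from a model reply that may be wrapped in a code fence.
function parseJsonReply<T>(reply: string): T {
  let text = reply.trim();
  if (text.startsWith(FENCE)) {
    // Drop the opening fence line (e.g. FENCE + "json") ...
    const firstNewline = text.indexOf("\n");
    text = firstNewline === -1 ? text.slice(FENCE.length) : text.slice(firstNewline + 1);
    // ... and the closing fence, if present.
    if (text.endsWith(FENCE)) {
      text = text.slice(0, -FENCE.length);
    }
  }
  try {
    return JSON.parse(text) as T;
  } catch {
    throw new Error(`Model reply is not valid JSON: ${reply}`);
  }
}

// Usage: a reply shaped the way chat models often return JSON.
interface Sentiment {
  label: string;
  score: number;
}

const reply = [FENCE + "json", '{"label": "positive", "score": 0.92}', FENCE].join("\n");
const parsed = parseJsonReply<Sentiment>(reply);
console.log(parsed.label, parsed.score); // positive 0.92
```

Throwing on invalid JSON, rather than returning a partial object, lets the calling chain retry or fall back explicitly.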

LangChain with Different LLMs

LangChain is model-agnostic and supports plug-and-play integration with a wide range of LLM providers—from OpenAI to local models via Ollama—giving you flexibility for experimentation, scaling, and deployment.
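Plug-and-play works because LangChain's chat-model wrappers share one interface (in LangChain.js they all expose methods such as `invoke` and `stream`). The sketch below illustrates that design with a hypothetical `ChatModel` interface and two stand-in classes instead of real SDK calls: application code depends only on the interface, so the provider becomes a configuration detail.

```typescript
// Hypothetical interface standing in for LangChain's shared chat-model contract.
interface ChatModel {
  readonly name: string;
  invoke(prompt: string): Promise<string>;
}

// Stand-in providers; real code would construct ChatOpenAI, ChatOllama, etc.
class FakeHostedModel implements ChatModel {
  readonly name = "hosted";
  async invoke(prompt: string): Promise<string> {
    return `[hosted] ${prompt.toUpperCase()}`;
  }
}

class FakeLocalModel implements ChatModel {
  readonly name = "local";
  async invoke(prompt: string): Promise<string> {
    return `[local] ${prompt}`;
  }
}

// Application code only sees ChatModel, so switching providers needs no edits here.
async function summarize(model: ChatModel, text: string): Promise<string> {
  return model.invoke(`Summarize: ${text}`);
}

// Pick the implementation from configuration, e.g. an environment flag.
function createModel(useLocal: boolean): ChatModel {
  return useLocal ? new FakeLocalModel() : new FakeHostedModel();
}

summarize(createModel(true), "LangChain wrappers").then(console.log);
// prints: [local] Summarize: LangChain wrappers
```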

📊 When to Use Which LLM?

| Goal | Recommended LLM |
|---|---|
| Highest-quality Q&A/chat | GPT-4 (OpenAI) |
| Budget-friendly applications | GPT-3.5 or Mistral |
| Long-context summarization | Claude 3 (Anthropic) |
| Fully local/offline use | Ollama with Mistral/Llama GGUF models |
| Advanced retrieval tasks | GPT-4 + RAG (Retrieval-Augmented Generation) |
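In application code, the table above can be encoded as configuration so the model choice lives in one place. The sketch below is a hypothetical lookup: the `Goal` names, provider labels, and model identifier strings are illustrative assumptions that mirror the table, not a LangChain API, so check each provider's current model list before relying on them.

```typescript
// Hypothetical encoding of the "When to Use Which LLM?" table as configuration.
type Goal = "quality-chat" | "budget" | "long-context" | "offline" | "advanced-retrieval";

interface ModelChoice {
  provider: "openai" | "anthropic" | "ollama";
  model: string; // illustrative identifiers; verify against the provider's docs
  useRag: boolean; // whether to put a retriever in front of the model
}

// Record<Goal, ...> makes the compiler reject a goal without an entry.
const MODEL_FOR_GOAL: Record<Goal, ModelChoice> = {
  "quality-chat": { provider: "openai", model: "gpt-4", useRag: false },
  budget: { provider: "openai", model: "gpt-3.5-turbo", useRag: false }, // or a Mistral model
  "long-context": { provider: "anthropic", model: "claude-3-opus-20240229", useRag: false },
  offline: { provider: "ollama", model: "mistral", useRag: false },
  "advanced-retrieval": { provider: "openai", model: "gpt-4", useRag: true },
};

function chooseModel(goal: Goal): ModelChoice {
  return MODEL_FOR_GOAL[goal];
}

console.log(chooseModel("offline").provider); // ollama
```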

🧠 Memory & Agent Compatibility

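How the models above work with memory and agents in LangChain:

- All of the models above work with LangChain memory and prompt chaining. Agent workflows that rely on tool calling additionally need a model with tool-calling support, which the major hosted models provide and only some local models do.

- Claude models take the system prompt as a separate parameter rather than as a message in the conversation; LangChain's ChatAnthropic wrapper converts a leading system message into that parameter for you.

- Local models often have smaller context windows, so long conversation histories must be trimmed or summarized to fit.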
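A common way to manage a local model's context window is to keep the system message and drop the oldest turns until the history fits a token budget. The sketch below assumes roughly four characters per token (a heuristic, not a real tokenizer) and uses a hypothetical `Message` type. LangChain.js also provides a `trimMessages` utility in `@langchain/core/messages` for this job, which you would normally prefer in a real chain.

```typescript
// Hypothetical message shape for this sketch (not LangChain's BaseMessage).
interface Message {
  role: "system" | "user" | "assistant";
  content: string;
}

// Rough heuristic: ~4 characters per token. Real tokenizers differ by model.
function estimateTokens(message: Message): number {
  return Math.ceil(message.content.length / 4);
}

// Keep system messages, then the most recent turns that fit within maxTokens.
function trimHistory(messages: Message[], maxTokens: number): Message[] {
  const system = messages.filter((m) => m.role === "system");
  const rest = messages.filter((m) => m.role !== "system");

  let budget = maxTokens - system.reduce((sum, m) => sum + estimateTokens(m), 0);
  const kept: Message[] = [];

  // Walk backwards from the newest message so recent context survives.
  for (let i = rest.length - 1; i >= 0; i--) {
    const cost = estimateTokens(rest[i]);
    if (cost > budget) break; // stop at the first turn that does not fit
    kept.unshift(rest[i]);
    budget -= cost;
  }
  return [...system, ...kept];
}

const conversation: Message[] = [
  { role: "system", content: "You are a helpful assistant." }, // 28 chars -> 7 tokens
  { role: "user", content: "a".repeat(400) }, // 100 tokens
  { role: "assistant", content: "b".repeat(200) }, // 50 tokens
  { role: "user", content: "c".repeat(40) }, // 10 tokens
];

console.log(trimHistory(conversation, 80).map((m) => m.role).join(",")); // system,assistant,user
```

Stopping at the first turn that does not fit, instead of skipping it and continuing, keeps the retained history contiguous so the model never sees a reply without the question that prompted it.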

🔄 Supported LLM Providers & Wrappers

The table below lists commonly used providers and their LangChain wrappers; the list keeps growing:

| Provider | LangChain Wrapper | Key Features |
|---|---|---|
| OpenAI | ChatOpenAI | GPT-4, GPT-3.5-turbo; best-in-class performance, streaming, system messages |
| Anthropic | ChatAnthropic | Claude 2, Claude 3; longer context windows, safety-first design |
| Mistral AI | ChatMistralAI | Lightweight, fast open models (e.g., Mixtral); well suited to low-latency use |
| Google | ChatGoogleGenerativeAI or ChatVertexAI | Gemini models via Google AI Studio or Vertex AI |
| Cohere | ChatCohere | Strong embeddings and multilingual models |
| Local LLMs | Ollama or ChatOllama | Run GGUF/GGML models (e.g., Mistral, Llama 3) locally via Ollama or Hugging Face Transformers |

🔧 How to Configure an LLM in LangChain

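The following code sets up GPT-4 in LangChain.js. It uses the current `@langchain/openai` package; older releases imported `ChatOpenAI` from `langchain/chat_models/openai`.

```typescript
// npm install @langchain/openai
import { ChatOpenAI } from "@langchain/openai";

const llm = new ChatOpenAI({
  model: "gpt-4", // formerly `modelName`
  temperature: 0.7, // higher values give more varied output
  streaming: true, // emit tokens as they are generated
  apiKey: process.env.OPENAI_API_KEY, // formerly `openAIApiKey`; OPENAI_API_KEY is also read by default
});

// Top-level await requires an ES module context.
const reply = await llm.invoke("Explain LangChain in one sentence.");
console.log(reply.content);
```

✅ Tip: Set `streaming: true` to enable real-time responses in apps with a UI or chat.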
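With streaming enabled, a UI can render text as it arrives instead of waiting for the full reply. In LangChain.js, chat models also expose a `stream()` method that returns an async iterable of message chunks. The sketch below shows the consuming side with a stand-in async generator (`fakeTokenStream`, hypothetical) in place of the model so it runs without an API key; `onToken` represents whatever updates your UI.

```typescript
// Stand-in for a model stream: yields the reply word by word with a short delay.
async function* fakeTokenStream(reply: string): AsyncGenerator<string> {
  for (const token of reply.match(/\S+\s*/g) ?? []) {
    await new Promise<void>((resolve) => setTimeout(resolve, 10)); // simulate latency
    yield token;
  }
}

// Consume the stream: hand each chunk to the UI and return the full text at the end.
async function streamToUi(
  chunks: AsyncIterable<string>,
  onToken: (token: string) => void,
): Promise<string> {
  let full = "";
  for await (const chunk of chunks) {
    onToken(chunk); // e.g. append to a chat bubble
    full += chunk;
  }
  return full;
}

streamToUi(fakeTokenStream("Hello from LangChain"), (t) => console.log("chunk:", t)).then(
  (full) => console.log("final:", full),
);
```

With a real model, the same loop would iterate over the chunks from `await llm.stream(...)`, reading the text from each chunk's `content` field.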