LangChain in Practice: Tools, Templates & Memory Walkthrough

Part 4 of LangChain Mastery

5/27/2025

In the previous module, we explored the core concepts of LangChain — what chains, agents, tools, and memory are and why they matter. Now it’s time to connect those pieces and walk through how to use them together to build a context-aware, multi-functional LLM application.

This module focuses on practical integration:

- 🧠 Prompt templates + LLM + memory = intelligent chatbot

- 🔧 Tools + chains = agents with real-world capabilities

- 📦 Document loaders + vector stores = RAG-powered search

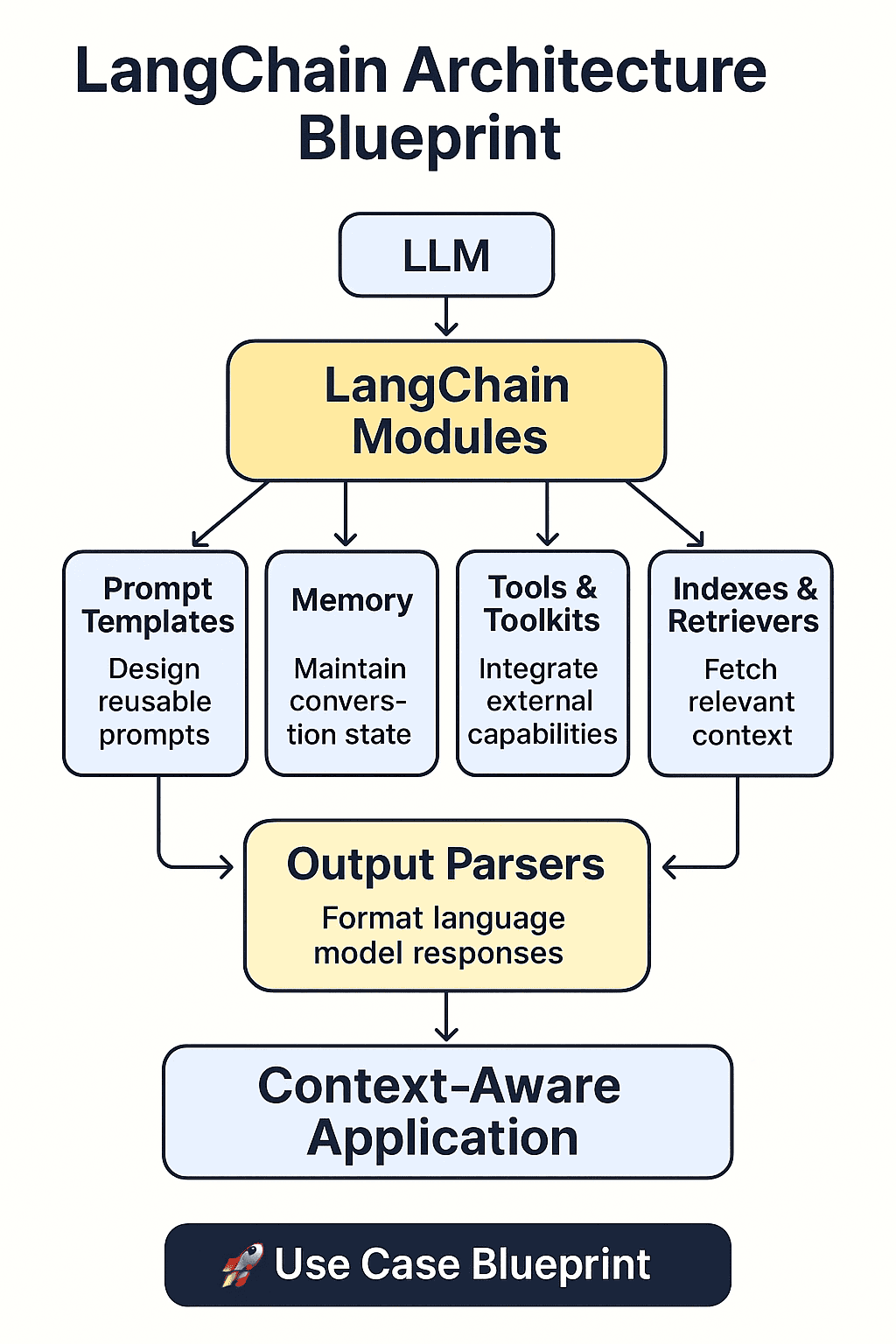

Below diagram depict Langchain component blueprint:

Each section below includes guidance, tips, and 🛠️ Try This blocks so you can build while you learn.

🧱 Prompt Templates

PromptTemplates let you design structured prompts with dynamic variables, which makes them ideal for standardizing the inputs you send to your LLMs.

🛠️ Try This: Create a prompt template that accepts a product_name and asks the LLM to generate a one-line elevator pitch.

Example (with PromptTemplate imported from langchain_core.prompts):

PromptTemplate.from_template("Give me a one-line pitch for {product_name}")

💡 Tip: Keep prompt templates modular and reusable across chains.
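To make the mechanics concrete, here is a minimal, framework-free sketch of what a prompt template does. It records which {variables} the template string expects and refuses to render if one is missing. MiniPromptTemplate is a hypothetical name used only for illustration and is not LangChain's implementation. LangChain's PromptTemplate does expose comparable input_variables and format() members.

```python
from string import Formatter
from typing import List


class MiniPromptTemplate:
    """Hypothetical stand-in for PromptTemplate: a template string plus the
    variable names it expects, validated when the prompt is rendered."""

    def __init__(self, template: str) -> None:
        self.template = template
        # Formatter.parse yields (literal_text, field_name, format_spec, conversion);
        # field_name is None for trailing literal text, so it is filtered out.
        self.input_variables: List[str] = sorted(
            {field for _, field, _, _ in Formatter().parse(template) if field}
        )

    def format(self, **kwargs: str) -> str:
        missing = [v for v in self.input_variables if v not in kwargs]
        if missing:
            raise KeyError(f"missing template variables: {missing}")
        return self.template.format(**kwargs)


pitch = MiniPromptTemplate("Give me a one-line pitch for {product_name}")
print(pitch.input_variables)
print(pitch.format(product_name="a solar-powered backpack"))
```

Catching a missing variable at format time is the main advantage over ad hoc string concatenation. Otherwise a half-filled prompt could be sent to the model.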

🧠 Memory

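LangChain offers memory modules such as ConversationBufferMemory (keeps the full transcript), ConversationSummaryMemory (keeps an LLM-written running summary), and ConversationEntityMemory (tracks facts about entities mentioned in the conversation).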

🛠️ Try This: Use ConversationSummaryMemory for long chats to keep token usage down while preserving context.

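Example (ConversationSummaryMemory is imported from langchain.memory and ChatOpenAI from langchain_openai):

ConversationSummaryMemory(llm=ChatOpenAI())

⚠️ Common Pitfall: Avoid unbounded memory in production. A buffer that grows with every turn drives up token cost and latency, and it can eventually overflow the model’s context window.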
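The sketch below illustrates bounded, summary-based memory in plain Python, assuming a pluggable summarize function. WindowedSummaryMemory and naive_summarize are hypothetical names, and naive_summarize is a stand-in for an LLM call. The design is closer in spirit to LangChain's ConversationSummaryBufferMemory, which keeps recent messages verbatim and summarizes older ones once a token limit is exceeded. ConversationSummaryMemory, by contrast, maintains a single running summary.

```python
from typing import Callable, List, Tuple

Turn = Tuple[str, str]  # (speaker, text)


class WindowedSummaryMemory:
    """Hypothetical bounded memory: keeps the last `max_turns` turns verbatim
    and folds older turns into a running summary via `summarize`."""

    def __init__(self, summarize: Callable[[str, List[Turn]], str], max_turns: int = 4) -> None:
        self.summarize = summarize
        self.max_turns = max_turns
        self.summary = ""
        self.recent: List[Turn] = []

    def add(self, speaker: str, text: str) -> None:
        self.recent.append((speaker, text))
        if len(self.recent) > self.max_turns:
            # Evict the oldest turns and merge them into the summary.
            overflow = self.recent[: -self.max_turns]
            self.recent = self.recent[-self.max_turns :]
            self.summary = self.summarize(self.summary, overflow)

    def context(self) -> str:
        """Build the text that would be prepended to the next prompt."""
        lines = [f"Summary: {self.summary}"] if self.summary else []
        lines += [f"{speaker}: {text}" for speaker, text in self.recent]
        return "\n".join(lines)


def naive_summarize(summary: str, turns: List[Turn]) -> str:
    # Stand-in for an LLM call: keeps only the first five words of each evicted turn.
    notes = ["{}: {}".format(s, " ".join(t.split()[:5])) for s, t in turns]
    return "; ".join(filter(None, [summary] + notes))


memory = WindowedSummaryMemory(naive_summarize, max_turns=2)
for i in range(5):
    memory.add("human", f"message number {i}")
print(memory.context())
```

Because recent is capped at max_turns, the verbatim part of the context stays bounded no matter how long the chat runs. A real LLM summarizer would also compress the summary itself instead of appending to it as this toy does.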

🔧 Tools & Toolkits

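Tools extend your LLM’s capabilities by letting it call external functions such as calculators, search engines, or APIs. Use them with Agents, which decide at run time which tool to call, or invoke them directly as steps in Chains.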

🛠️ Try This: Load a calculator tool and let your agent solve “What is 5 * sqrt(49)?”

💡 Tip: Use LangChain’s built-in load_tools (for example, the “llm-math” calculator tool) or define your own custom tools, such as with the @tool decorator, to wrap APIs.
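Here is a hedged sketch of a calculator tool that could answer the Try This question. It evaluates arithmetic by walking Python's ast module and permits only whitelisted operators plus sqrt, rather than calling eval() on model output. The calculator function and TOOLS registry are hypothetical names for illustration; they are not LangChain's built-in llm-math tool.

```python
import ast
import math
import operator

# Whitelisted operators and functions; anything else is rejected.
_BIN_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.Pow: operator.pow,
}
_FUNCS = {"sqrt": math.sqrt}


def _eval(node: ast.AST) -> float:
    if isinstance(node, ast.Expression):
        return _eval(node.body)
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _BIN_OPS:
        return _BIN_OPS[type(node.op)](_eval(node.left), _eval(node.right))
    if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
        return -_eval(node.operand)
    if (
        isinstance(node, ast.Call)
        and isinstance(node.func, ast.Name)
        and node.func.id in _FUNCS
        and len(node.args) == 1
        and not node.keywords
    ):
        return _FUNCS[node.func.id](_eval(node.args[0]))
    raise ValueError(f"unsupported expression: {ast.dump(node)}")


def calculator(expression: str) -> float:
    """Evaluate a basic arithmetic expression such as '5 * sqrt(49)'."""
    return _eval(ast.parse(expression, mode="eval"))


# A registry an agent loop could dispatch into: tool name -> (description, function).
TOOLS = {"calculator": ("Evaluates arithmetic like '5 * sqrt(49)'.", calculator)}

description, fn = TOOLS["calculator"]
print(fn("5 * sqrt(49)"))  # 35.0
```

In LangChain, decorating a function like calculator with @tool (from langchain_core.tools) builds the tool description an agent sees. The decorator draws on the function's name, docstring, and type hints.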

🔄 Chains

Chains help you sequence LLM calls and other logic. Common types include:

- LLMChain

- SequentialChain

- RouterChain

🛠️ Try This: Create a SequentialChain that extracts entities from text, then summarizes them.

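⚠️ Gotcha: Ensure each intermediate chain’s outputs match the next chain’s expected inputs. In a SequentialChain, the output_key of one step must match an input variable of the step that consumes it.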
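The Try This pipeline and the gotcha above can be sketched without any framework. Each step declares the keys it reads and the key it writes, and the wiring is checked before anything runs. Step and build_sequence are hypothetical names. The regex-based extract_entities and template-based summarize functions are stand-ins for LLM-backed chains, used here only to show how keys flow between steps.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    """Hypothetical chain step: reads input_keys and writes one output_key."""
    name: str
    input_keys: List[str]
    output_key: str
    fn: Callable[..., str]


def build_sequence(steps: List[Step], initial_keys: List[str]) -> Callable[..., Dict[str, str]]:
    # Validate wiring up front: every step's inputs must already be available.
    available = set(initial_keys)
    for step in steps:
        missing = set(step.input_keys) - available
        if missing:
            raise ValueError(f"step '{step.name}' expects missing keys {sorted(missing)}")
        available.add(step.output_key)

    def run(**inputs: str) -> Dict[str, str]:
        state = dict(inputs)
        for step in steps:
            state[step.output_key] = step.fn(**{k: state[k] for k in step.input_keys})
        return state

    return run


# Stand-ins for LLM calls: regex "entity extraction" and a template "summary".
def extract_entities(text: str) -> str:
    return ", ".join(sorted(set(re.findall(r"\b[A-Z][a-z]+\b", text))))


def summarize(entities: str) -> str:
    return f"The text mentions: {entities}."


pipeline = build_sequence(
    [
        Step("extract", ["text"], "entities", extract_entities),
        Step("summarize", ["entities"], "summary", summarize),
    ],
    initial_keys=["text"],
)
result = pipeline(text="Ada met Grace in London to discuss compilers.")
print(result["summary"])
```

Validating the wiring when the pipeline is built surfaces a mismatched key immediately. Without that check, the error would only appear halfway through a run.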

📄 Document Loaders & Vector Stores

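Document loaders read source files such as PDFs or web pages into Document objects, and vector stores index their embeddings for similarity search. Together they are the foundation for document Q&A and semantic search bots.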

🛠️ Try This: Load a PDF using PyPDFLoader, chunk it, embed using OpenAIEmbeddings, and store in FAISS.
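As a toy illustration of the embed, store, and search steps, the sketch below swaps OpenAIEmbeddings for bag-of-words counts. It also swaps FAISS for a linear scan ranked by cosine similarity. ToyVectorStore and embed are hypothetical names. The add_texts and similarity_search method names were chosen to mirror LangChain's vector store interface. Real embeddings are dense vectors that capture meaning rather than word overlap, and FAISS is built for fast search over large collections.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (real embeddings are dense vectors)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Hypothetical in-memory index: stores (chunk, vector) pairs, ranks by cosine."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add_texts(self, texts: List[str]) -> None:
        self.items.extend((t, embed(t)) for t in texts)

    def similarity_search(self, query: str, k: int = 2) -> List[str]:
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


# Pre-chunked text standing in for the output of PyPDFLoader plus a splitter.
chunks = [
    "Refunds are issued within 14 days of a return.",
    "Our office is open Monday to Friday.",
    "Shipping is free on orders over 50 dollars.",
]
store = ToyVectorStore()
store.add_texts(chunks)
print(store.similarity_search("How many days until I get refunds?", k=1))
```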

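💡 Tip: Use RecursiveCharacterTextSplitter. It splits on paragraph breaks first, then line breaks, then spaces, so chunks stay within chunk_size (measured in characters by default) while keeping related text together.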
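A simplified sketch of the recursive strategy follows. It assumes a separator order of paragraph break, line break, space, and finally individual characters. recursive_split is a hypothetical helper and differs from RecursiveCharacterTextSplitter in two ways: it has no chunk_overlap, and it drops the separator at chunk boundaries. It still shows why every chunk stays within chunk_size while coarse boundaries are preferred.

```python
from typing import List, Sequence

DEFAULT_SEPARATORS = ("\n\n", "\n", " ", "")


def recursive_split(text: str, chunk_size: int, separators: Sequence[str] = DEFAULT_SEPARATORS) -> List[str]:
    """Split text into chunks of at most chunk_size characters, preferring coarse separators."""
    if len(text) <= chunk_size:
        return [text] if text else []
    # Coarsest separator present in the text ("" is always "present": split into characters).
    sep = next((s for s in separators if s in text), "")
    remaining = separators[separators.index(sep) + 1 :] if sep in separators else ()
    pieces = list(text) if sep == "" else text.split(sep)

    chunks: List[str] = []
    current = ""
    for piece in pieces:
        if len(piece) > chunk_size:
            # Piece is too big even on its own: flush, then recurse with finer separators.
            if current:
                chunks.append(current)
                current = ""
            chunks.extend(recursive_split(piece, chunk_size, remaining))
            continue
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # greedily grow the current chunk
        else:
            chunks.append(current)
            current = piece
    if current:
        chunks.append(current)
    return chunks


doc = "LangChain splits text.\n\nIt tries paragraphs first, then lines, then words."
chunks = recursive_split(doc, chunk_size=40)
for chunk in chunks:
    print(repr(chunk))
```

With LangChain itself, the splitter is configured along the lines of RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200) and applied with split_text() or split_documents(). Those numbers are illustrative, not recommendations.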

🧾 Output Parsers

Output Parsers help you convert LLM text into structured data formats — useful for validation and interop.

🛠️ Try This: Use PydanticOutputParser to extract structured product info (name, price) from text.

⚠️ Best Practice: Always include the parser’s format instructions (returned by get_format_instructions()) in the prompt so the LLM knows the exact schema to produce.
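The sketch below shows the two halves of an output parser in plain Python, using a dataclass instead of Pydantic. The first half produces format instructions that go into the prompt. The second half extracts and validates the JSON the model returns. Product, format_instructions, and parse_product are hypothetical names, and the reply string stands in for a real LLM response.

```python
import json
import re
from dataclasses import dataclass, fields


@dataclass
class Product:
    name: str
    price: float


def format_instructions(model: type) -> str:
    """Describe the expected JSON shape so it can be pasted into the prompt."""
    keys = ", ".join(f'"{f.name}": <{f.type.__name__}>' for f in fields(model))
    return f"Respond only with a JSON object of the form {{{keys}}}."


def parse_product(llm_output: str) -> Product:
    """Pull the first {...} block out of the reply, then check fields and coerce types."""
    match = re.search(r"\{.*\}", llm_output, re.DOTALL)
    if not match:
        raise ValueError("no JSON object found in LLM output")
    data = json.loads(match.group(0))
    missing = {f.name for f in fields(Product)} - set(data)
    if missing:
        raise ValueError(f"missing fields: {sorted(missing)}")
    return Product(name=str(data["name"]), price=float(data["price"]))


prompt = "Extract the product from: 'The AeroMug costs $24.50.'\n" + format_instructions(Product)
reply = 'Sure! {"name": "AeroMug", "price": 24.5}'  # stand-in for a real LLM response
print(prompt)
print(parse_product(reply))
```

With LangChain, PydanticOutputParser(pydantic_object=...) supplies both halves through get_format_instructions() and parse().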

📚 Recap

This module focused on practical workflows using LangChain building blocks. Here’s what you’ve integrated:

- Templates + LLMs → Structured generation

- Memory + Chains → Conversational assistants

- Tools + Agents → Real-world automation

- Document loading + RAG → Knowledge bots

- Output Parsers → Structured API-like responses

In the next module, we’ll build complete apps using these integrated patterns.

➡️ Ready? Let’s Build a LangChain-Powered Assistant →