Hands-On with LangChain Prompt Templates

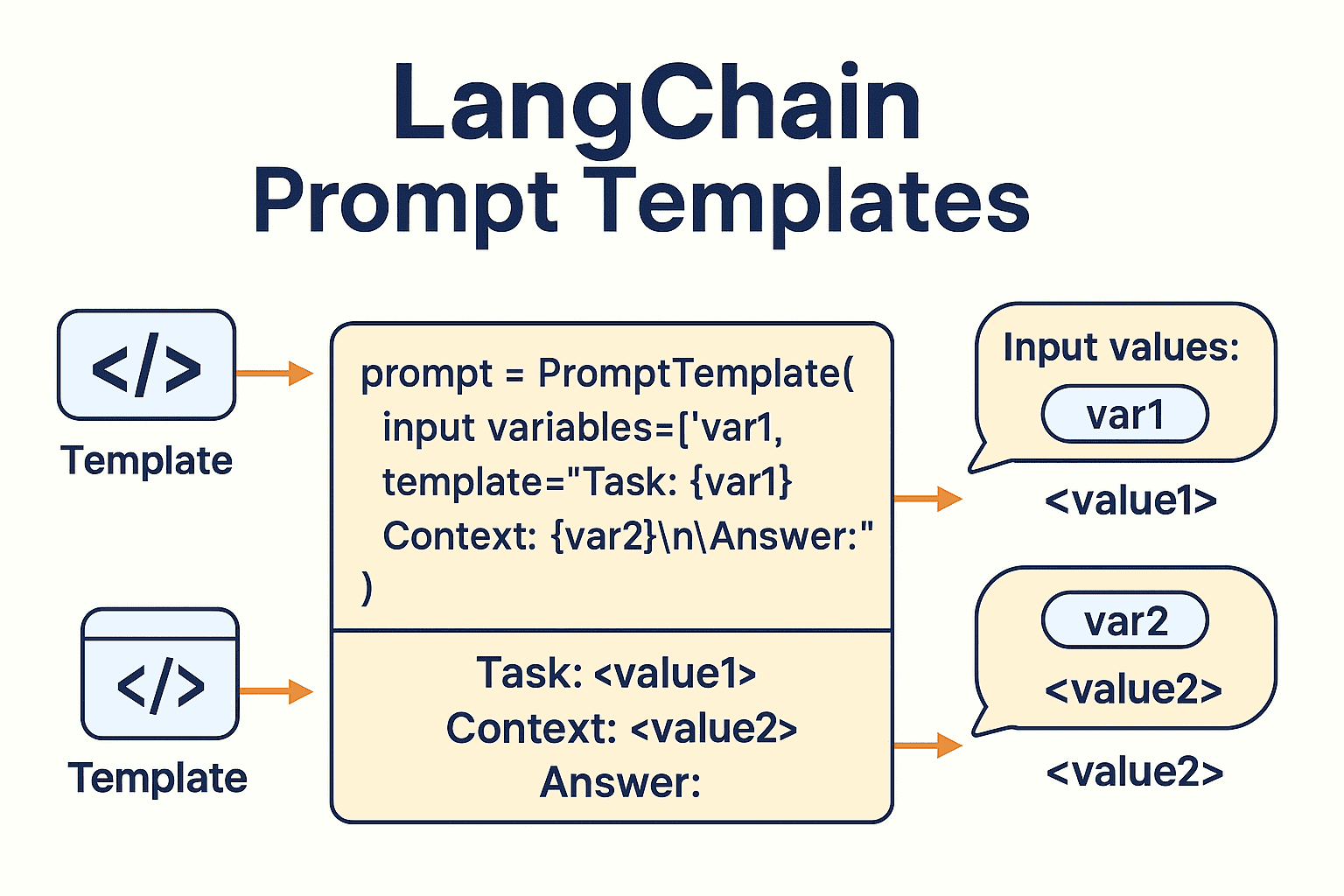

Prompt engineering is the beating heart of building effective LLM-powered apps. In this post, we’ll explore PromptTemplate from LangChain — a powerful abstraction that lets you structure reusable, parameterized prompts for various tasks like Q&A, summarization, and classification.

🔗 This post is part of our LangChain Mastery Series

📌 Module: LangChain Components → Prompt Templates

💡 Why Prompt Templates?

Prompt templates allow developers to:

- 🔁 Reuse prompts across different inputs

- 🧠 Maintain consistent instructions

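- 🔒 Make expected inputs explicit, so a missing value fails at format time (templating does not sanitize input values, so on its own it is not a defense against prompt injection)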

🧱 Core Syntax

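```python
# Current releases expose PromptTemplate from langchain_core;
# older langchain versions also re-export it as langchain.prompts.
from langchain_core.prompts import PromptTemplate

template = "Translate this sentence to French: {sentence}"

prompt = PromptTemplate(input_variables=["sentence"], template=template)

# format() returns the filled-in prompt as a plain string
print(prompt.format(sentence="Hello, how are you?"))
```

✅ Output:

Translate this sentence to French: Hello, how are you?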

You can pass this prompt to any LangChain chain, LLM, or agent.

🔧 Building a Contextual Q&A Prompt

```python
from langchain_core.prompts import PromptTemplate

template = """
Use the context below to answer the user's question.

Context: {context}

Question: {question}

Answer:
"""

qa_prompt = PromptTemplate(
    input_variables=["context", "question"],
    template=template,
)
```

This format is useful for retrieval-augmented generation (RAG) pipelines, where {context} is filled with the documents retrieved for each question.

🧪 Mini Exercises with Solutions

Sentiment Prompt

```python
template = "Classify the sentiment of this review: {review_text}"

sentiment_prompt = PromptTemplate(
    input_variables=["review_text"],
    template=template,
)
```

Multi-Input Prompt

```python
prompt = PromptTemplate(
    input_variables=["context", "question"],
    template="Context: {context}\nQuestion: {question}\nAnswer:",
)
```

Best Practices

- ✅ Keep prompts short but instructive
- 🔄 Modularize templates for easy versioning and testing
- ⚠️ Avoid hardcoding values that should be template variables
- 📚 Document each template's structure and expected inputs

🚀 TL;DR

• PromptTemplate enables clean and reusable prompts.

• Accepts dynamic inputs using {var} syntax.

• Works seamlessly across LangChain chains and agents.

• Essential for building scalable LLM applications.

🔗 What’s Next?

In the next post, we’ll explore Memory: how LangChain stores conversation history across interactions.

Want to see this integrated into a chatbot or tool? Drop a comment or follow along in the LangChain Series!
