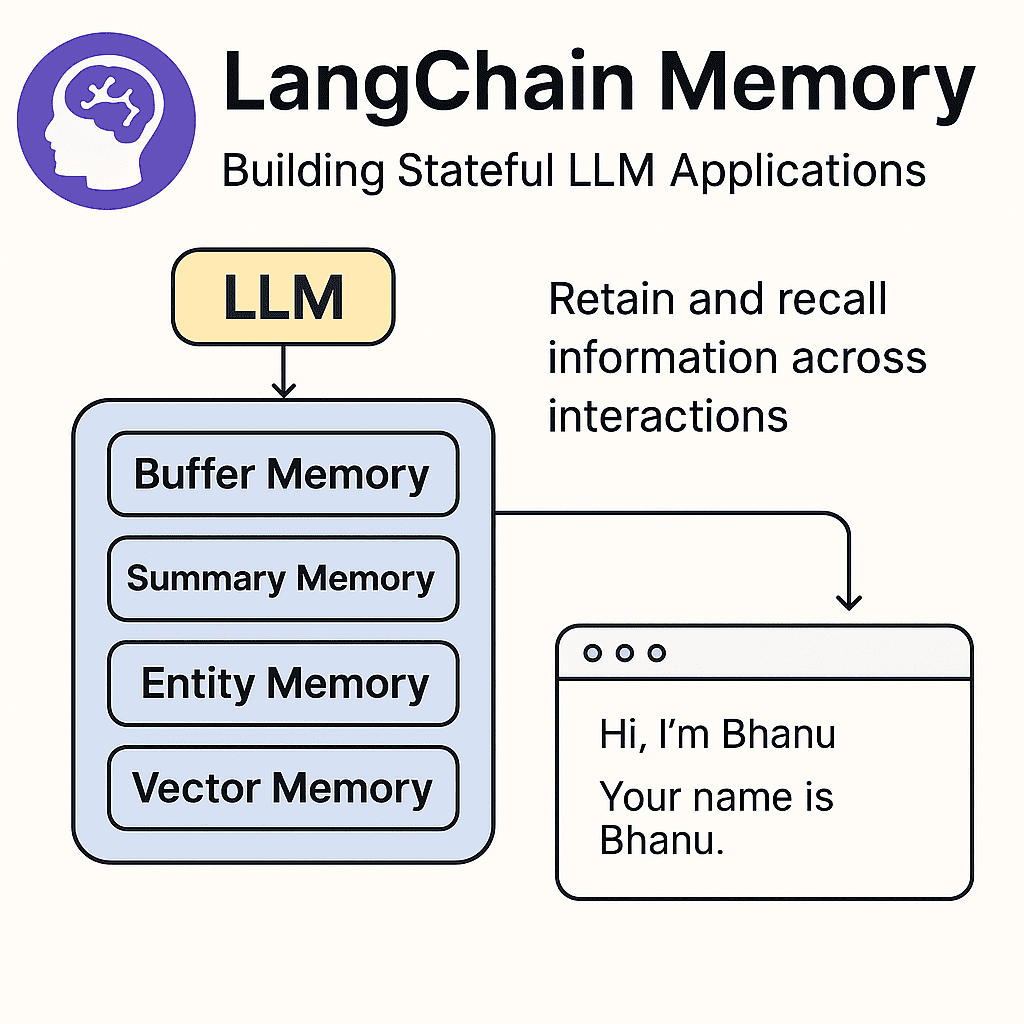

LangChain Memory: Building Stateful LLM Applications

by SuperML.dev


Learn how to use LangChain Memory to retain and recall information across interactions — a vital feature for chatbots, virtual agents, and intelligent assistants.

✨ Why Memory Matters in LLM Apps

Large Language Models (LLMs) are stateless by default: each call sees only the prompt it is given. Without memory, your assistant forgets everything after each prompt. LangChain's memory modules store earlier exchanges and feed them back into later prompts, enabling:

- 🗣️ Multi-turn conversations

- 🧠 Personalized interactions

- 🔄 Efficient context retention
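To make "stateless" concrete, here is a minimal, dependency-free TypeScript sketch (no LangChain involved). `fakeModel` is a hypothetical stand-in for an LLM call that can use only the text of the prompt it receives. The second half shows the manual workaround of resending earlier turns, which is the bookkeeping a memory module automates for you.

```typescript
type Turn = { human: string; ai: string };

// Hypothetical stand-in for an LLM call: it answers using ONLY the prompt text.
function fakeModel(prompt: string): string {
  const lines = prompt.split("\n");
  const question = lines[lines.length - 1];
  if (!/what's my name/i.test(question)) return "Nice to meet you!";
  const intro = prompt.match(/I'm (\w+)/);
  return intro ? `Your name is ${intro[1]}.` : "I don't know your name.";
}

// Stateless usage: every call sends only the newest message.
function askStateless(input: string): string {
  return fakeModel(`Human: ${input}`);
}

// Manual "memory": keep past turns and prepend them to each new prompt.
const pastTurns: Turn[] = [];
function askWithHistory(input: string): string {
  const transcript = pastTurns.map((t) => `Human: ${t.human}\nAI: ${t.ai}`);
  const prompt = [...transcript, `Human: ${input}`].join("\n");
  const reply = fakeModel(prompt);
  pastTurns.push({ human: input, ai: reply });
  return reply;
}

askStateless("Hi, I'm Bhanu");
console.log(askStateless("What's my name?")); // I don't know your name.

askWithHistory("Hi, I'm Bhanu");
console.log(askWithHistory("What's my name?")); // Your name is Bhanu.
```

The model function is identical in both cases; only the prompt differs. That is why memory is a prompt-construction concern rather than a property of the model.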

🧩 Types of Memory in LangChain

| Memory Type | Purpose |
|---|---|
| BufferMemory | Stores the full conversation history as-is |
| ConversationSummaryMemory | Summarizes past messages using an LLM for brevity |
| EntityMemory | Tracks entities such as names, topics, and objects |
| VectorStoreRetrieverMemory | Retrieves past data semantically using vector similarity |

Each memory type fits a different need, from short-term conversational continuity to long-term factual recall.
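All four types follow the same basic contract: before a call, the memory produces text to inject into the prompt; after the call, it records the new exchange (in LangChain.js these steps are the `loadMemoryVariables` and `saveContext` methods). The dependency-free sketch below illustrates that contract with two hypothetical classes, `BufferSketch` and `SummarySketch`. The `summarize` callback stands in for the LLM call that ConversationSummaryMemory would make; here it is a deliberately naive placeholder that just keeps the last few words, so the sketch runs offline.

```typescript
// Hypothetical contract loosely modeled on LangChain's load/save memory steps.
interface MemorySketch {
  load(): string; // text to inject into the next prompt
  save(input: string, output: string): void; // record one Human/AI exchange
}

type Summarizer = (previous: string, newLines: string) => string;

// Buffer-style: keeps every exchange verbatim, so the injected text keeps growing.
class BufferSketch implements MemorySketch {
  private lines: string[] = [];
  load(): string {
    return this.lines.join("\n");
  }
  save(input: string, output: string): void {
    this.lines.push(`Human: ${input}`, `AI: ${output}`);
  }
}

// Summary-style: keeps one running summary produced by `summarize`.
class SummarySketch implements MemorySketch {
  private summary = "";
  private summarize: Summarizer;
  constructor(summarize: Summarizer) {
    this.summarize = summarize;
  }
  load(): string {
    return this.summary;
  }
  save(input: string, output: string): void {
    this.summary = this.summarize(this.summary, `Human: ${input}\nAI: ${output}`);
  }
}

// Placeholder "summarizer" (NOT a real summary): keeps only the last maxWords words.
const maxWords = 12;
const naiveSummarize: Summarizer = (previous, newLines) =>
  `${previous} ${newLines}`.split(/\s+/).filter(Boolean).slice(-maxWords).join(" ");

const buffer = new BufferSketch();
const summary = new SummarySketch(naiveSummarize);
for (let turn = 1; turn <= 5; turn++) {
  buffer.save(`This is message number ${turn}`, `Acknowledged message ${turn}`);
  summary.save(`This is message number ${turn}`, `Acknowledged message ${turn}`);
}
console.log(buffer.load().split(/\s+/).length); // 50: grows by 10 words per turn
console.log(summary.load().split(/\s+/).length); // 12: capped at maxWords
```

The design point is the size of what `load()` returns: buffer-style memory grows linearly with the conversation, while summary-style memory stays bounded at the cost of an extra LLM call per turn.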

🛠️ Hands-On Example: BufferMemory

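```typescript
// Assumes an ES module (for top-level await) and OPENAI_API_KEY set in the environment.
import { ConversationChain } from "langchain/chains";
import { ChatOpenAI } from "@langchain/openai"; // older langchain releases: "langchain/chat_models/openai"
import { BufferMemory } from "langchain/memory";

// BufferMemory stores every turn and feeds it back into the next prompt.
const memory = new BufferMemory();
const chain = new ConversationChain({ llm: new ChatOpenAI(), memory });

await chain.call({ input: "Hi, I'm Bhanu" });
const res = await chain.call({ input: "What's my name?" });
console.log(res.response); // Output: "Your name is Bhanu." (exact wording varies)
```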

Because BufferMemory passes the first exchange back to the model along with the second question, the assistant can recall the name across turns.
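Under the hood, the chain fills a prompt template with two variables: the memory's text (BufferMemory exposes it under the default key `history`) and the new `input`. The sketch below mimics that step without LangChain. The template text, the `fillTemplate` helper, and the first AI reply are hypothetical simplifications for illustration, not ConversationChain's actual default prompt.

```typescript
// Hypothetical, simplified conversation template with two slots.
const template = "Current conversation:\n{history}\nHuman: {input}\nAI:";

// Replace each {name} placeholder with its value; unknown names are left as-is.
function fillTemplate(tpl: string, vars: Record<string, string>): string {
  return tpl.replace(/\{(\w+)\}/g, (whole: string, name: string) =>
    name in vars ? vars[name] : whole
  );
}

// What a buffer memory might hold after the first turn (the AI reply is made up).
const chatHistory = "Human: Hi, I'm Bhanu\nAI: Hello Bhanu! How can I help you today?";

const filledPrompt = fillTemplate(template, {
  history: chatHistory,
  input: "What's my name?",
});
console.log(filledPrompt);
```

The model never "remembers" anything itself; it simply receives a prompt that already contains the earlier introduction.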

When to Use Each Memory Type

- Use BufferMemory for raw dialogue retention

- Use ConversationSummaryMemory when saving tokens matters

- Use EntityMemory to build topic-aware agents

- Use VectorStoreRetrieverMemory to recall semantically relevant past exchanges from a long history, such as in document Q&A bots
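VectorStoreRetrieverMemory recalls the past exchanges most similar to the current input rather than the most recent ones. The sketch below shows that retrieval idea in plain TypeScript under two loud simplifications: the hypothetical `embed` function uses word-count vectors instead of a real embedding model, and an in-memory array stands in for the vector store. The helper names `remember` and `recall` are likewise illustrative, not LangChain APIs.

```typescript
type Vector = Map<string, number>;

// Toy "embedding": word counts. A real setup would call an embedding model.
function embed(text: string): Vector {
  const counts: Vector = new Map();
  for (const word of text.toLowerCase().match(/[a-z']+/g) ?? []) {
    counts.set(word, (counts.get(word) ?? 0) + 1);
  }
  return counts;
}

// Cosine similarity between two sparse word-count vectors.
function cosine(a: Vector, b: Vector): number {
  let dot = 0;
  a.forEach((count, word) => {
    dot += count * (b.get(word) ?? 0);
  });
  const norm = (v: Vector): number => {
    let sum = 0;
    v.forEach((x) => {
      sum += x * x;
    });
    return Math.sqrt(sum);
  };
  const denom = norm(a) * norm(b);
  return denom === 0 ? 0 : dot / denom;
}

// In-memory stand-in for a vector store of past exchanges.
const store: { text: string; vector: Vector }[] = [];
function remember(text: string): void {
  store.push({ text, vector: embed(text) });
}

// Return the k stored exchanges most similar to the query.
function recall(query: string, k = 1): string[] {
  const q = embed(query);
  return store
    .slice()
    .sort((x, y) => cosine(q, y.vector) - cosine(q, x.vector))
    .slice(0, k)
    .map((entry) => entry.text);
}

remember("Human: My favorite color is green. AI: Noted, green it is.");
remember("Human: I live in Pune. AI: Pune is a lovely city.");
remember("Human: I work as a data engineer. AI: Sounds interesting!");
console.log(recall("What is my favorite color?")); // the exchange about the color
```

Only the top `k` matches are injected into the prompt, so the prompt stays small even when the stored history is long; the quality of recall depends almost entirely on the embedding model a real setup would use.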

Memory can be attached to Chains, Agents, or even Tools.

