LangChain Chains: Building Workflows with LLMs

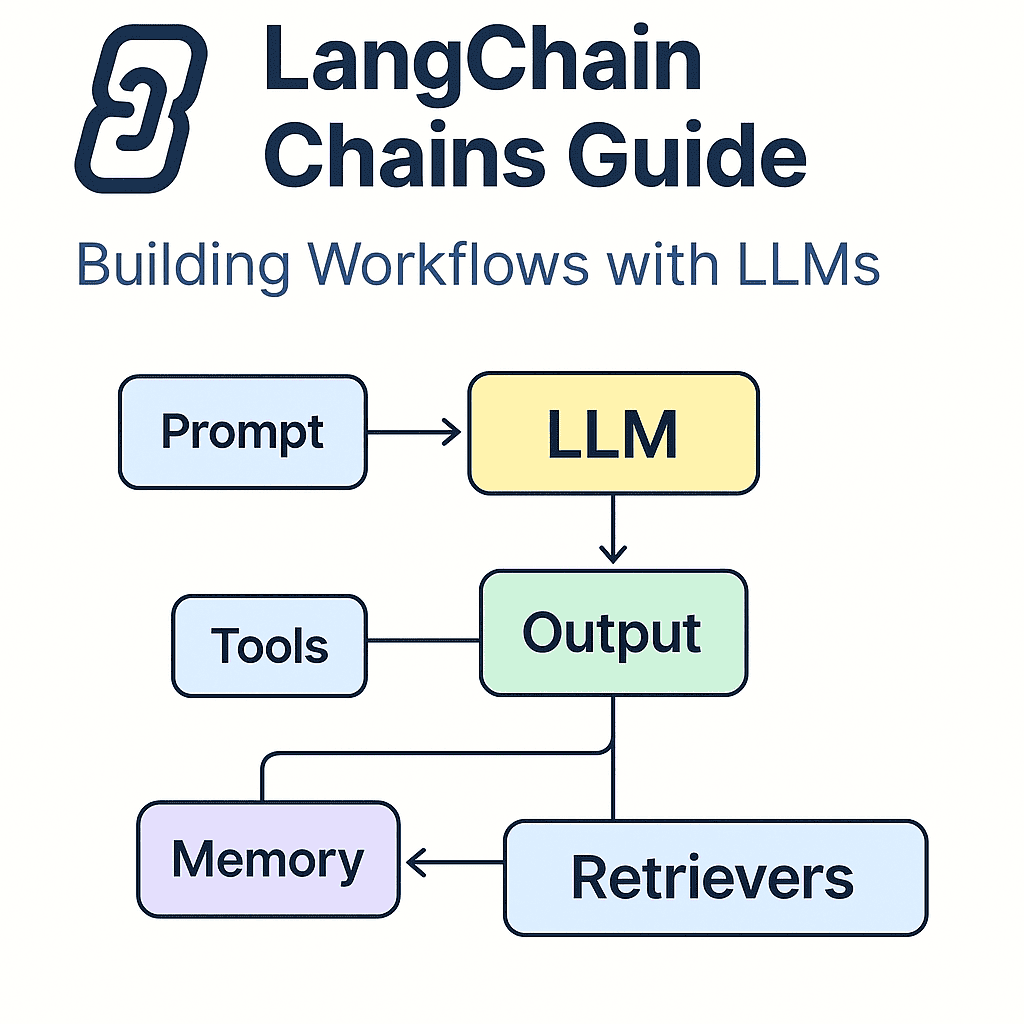

Chains are the backbone of LangChain. They allow you to compose LLM calls, tools, and memory into powerful pipelines for building real-world AI apps.

Whether you’re building a Q&A assistant, a multi-turn chatbot, or an agent that performs web searches, Chains are how you glue it all together.

⚙️ What is a Chain in LangChain?

A Chain is a sequence of steps — each powered by an LLM, a tool, or a retriever — that takes input, processes it, and returns structured output.

Common use cases:

- Prompt → LLM → Output (e.g., LLMChain)

- Question → Retriever → Context → LLM → Answer

- Input → Memory → Prompt → LLM → Response
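Every pattern above is the same idea: a list of steps where each step's output becomes the next step's input. The pure-Python sketch below shows the retrieval pattern (Question → Retriever → Context → LLM → Answer) without LangChain. `run_chain`, the toy `retrieve` function, the two-document `DOCS` corpus, and `fake_llm` are all hypothetical stand-ins. A real app would use a vector store and a model call instead.

```python
from typing import Callable, List

# A "step" is any function that turns one value into the next.
Step = Callable[[str], str]

# Hypothetical toy corpus standing in for a vector store.
DOCS = [
    "Chains compose prompts, models, and tools into pipelines.",
    "Memory modules let a chain remember earlier turns.",
]

def retrieve(question: str) -> str:
    """Toy retriever: pick the doc sharing the most words with the question."""
    q_words = set(question.lower().split())
    best = max(DOCS, key=lambda doc: len(q_words & set(doc.lower().split())))
    return f"Context: {best}\nQuestion: {question}"

def fake_llm(prompt: str) -> str:
    """Hypothetical model stand-in: answers by echoing the retrieved context."""
    first_line = prompt.splitlines()[0]
    return first_line.partition("Context: ")[2]

def run_chain(steps: List[Step], value: str) -> str:
    """Pass the value through each step in order; each output feeds the next step."""
    for step in steps:
        value = step(value)
    return value

print(run_chain([retrieve, fake_llm], "What do memory modules do?"))
```

Swapping `retrieve` for a prompt-formatting step gives the plain Prompt → LLM → Output pattern. The chain itself does not change.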

LangChain provides built-in types like:

- LLMChain

- SequentialChain

- SimpleSequentialChain

- RouterChain

🛠️ Example: Basic LLMChain

You can build simple one-shot pipelines where the LLM transforms a single prompt into output, such as translation or explanation.

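```python
from langchain.llms import OpenAI  # newer releases ship this class in langchain_openai
from langchain.prompts import PromptTemplate
from langchain.chains import LLMChain

prompt = PromptTemplate.from_template("Translate the following to French: {text}")

# Reads your key from the OPENAI_API_KEY environment variable.
llm = OpenAI(temperature=0)  # temperature=0 keeps output as repeatable as possible
chain = LLMChain(llm=llm, prompt=prompt)

print(chain.run("Hello, how are you?"))
```

Output (exact wording may vary by model): “Bonjour, comment ça va ?”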
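Under the hood, each LLMChain call fills the prompt template, calls the model once, and returns the inputs together with the reply under an output key. The sketch below is a rough pure-Python model of that flow, not LangChain's implementation. `MiniLLMChain` and the lambda used as a fake model are hypothetical. The only detail borrowed from LangChain is that LLMChain's default output key is `"text"`.

```python
from dataclasses import dataclass
from typing import Callable, Dict

@dataclass
class MiniLLMChain:
    """Hypothetical, simplified analogue of LLMChain: a template, a model, an output key."""
    template: str
    llm: Callable[[str], str]
    output_key: str = "text"  # LLMChain also defaults its output key to "text"

    def __call__(self, inputs: Dict[str, str]) -> Dict[str, str]:
        prompt = self.template.format(**inputs)     # fill the {placeholders}
        reply = self.llm(prompt)                    # exactly one model call
        return {**inputs, self.output_key: reply}   # inputs plus the new output

# Hypothetical fake model so the sketch runs offline.
chain = MiniLLMChain(
    template="Explain in one sentence: {topic}",
    llm=lambda prompt: f"<model reply to: {prompt}>",
)
print(chain({"topic": "chains"}))
```

Returning the inputs alongside the output is what lets chains be stacked. A later step can read any earlier variable by name.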

🔁 SequentialChain

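Use this when one step’s output feeds the next step’s input. SequentialChain passes named variables between steps, so each sub-chain’s `output_key` becomes available to every later step. SimpleSequentialChain covers the simpler case of a single input and a single output per step.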

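```python
from langchain.chains import LLMChain, SequentialChain
from langchain.prompts import PromptTemplate

# Illustrative steps (reuse `llm` from the LLMChain example). Step 1 writes "summary".
chain1 = LLMChain(llm=llm, prompt=PromptTemplate.from_template("Summarize: {input}"), output_key="summary")
# Step 2 reads "summary" and writes "result".
chain2 = LLMChain(llm=llm, prompt=PromptTemplate.from_template("Translate to French: {summary}"), output_key="result")

chain = SequentialChain(
    chains=[chain1, chain2],
    input_variables=["input"],
    output_variables=["result"],
)
```

This is useful for multi-step generation flows such as extract → summarize → translate.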
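To see how named variables flow through a SequentialChain, here is a minimal pure-Python sketch under stated assumptions. Each step is a hypothetical `(template, output_key)` pair, `fake_llm` wraps its prompt in parentheses so the data flow stays visible, and `run_sequential` is an illustrative helper, not a LangChain API. All steps share one dictionary of variables, and only the requested `output_variables` are returned.

```python
from typing import Dict, List, Tuple

# Hypothetical step format: (prompt template, name of the variable it produces).
Step = Tuple[str, str]

def fake_llm(prompt: str) -> str:
    """Hypothetical model stand-in: wraps the prompt so nesting shows the data flow."""
    return f"({prompt})"

def run_sequential(steps: List[Step], inputs: Dict[str, str],
                   output_variables: List[str]) -> Dict[str, str]:
    """Run steps in order over a shared dict of named variables."""
    known = dict(inputs)
    for template, output_key in steps:
        prompt = template.format(**known)   # a step may read any earlier variable
        known[output_key] = fake_llm(prompt)
    return {key: known[key] for key in output_variables}

steps = [
    ("Summarize: {input}", "summary"),
    ("Translate to French: {summary}", "result"),
]
print(run_sequential(steps, {"input": "a long article"}, ["result"]))
```

Because every step can see the whole variable dictionary, a third step could use both `input` and `summary`. A strict one-in, one-out pipeline would not allow that.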

🔀 RouterChain (Dynamic Routing)

RouterChain helps you direct different kinds of user inputs to the right pipeline.

Example: classify the type of user input, then route it to a domain-specific LLM chain for code, finance, or general queries.
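The routing idea fits in a few lines of plain Python: classify first, then dispatch. LangChain's router chains usually have an LLM choose the destination. This sketch swaps in a simple keyword classifier so it runs offline. The destination names, the keyword sets, and the lambdas standing in for domain chains are all hypothetical.

```python
from typing import Callable, Dict

# Hypothetical destination "chains"; in a real app each would be its own LLM chain.
DESTINATIONS: Dict[str, Callable[[str], str]] = {
    "code": lambda q: f"[code chain] {q}",
    "finance": lambda q: f"[finance chain] {q}",
    "general": lambda q: f"[general chain] {q}",
}

# Hypothetical keyword rules standing in for an LLM-based classifier.
KEYWORDS = {
    "code": {"python", "bug", "function", "error"},
    "finance": {"stock", "budget", "tax", "invest"},
}

def classify(query: str) -> str:
    """Return the first destination whose keywords appear in the query, else 'general'."""
    words = set(query.lower().split())
    for name, keywords in KEYWORDS.items():
        if words & keywords:
            return name
    return "general"

def route(query: str) -> str:
    """Classify the query, then hand it to the matching destination chain."""
    return DESTINATIONS[classify(query)](query)

print(route("Why does my Python function return None"))
print(route("How should I budget for taxes"))
print(route("Tell me a fun fact"))
```

A `"general"` fallback matters in practice. Without it, any input the classifier cannot place would have nowhere to go.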

🧠 Chains with Memory

Chains become stateful when combined with LangChain’s memory modules.

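```python
from langchain.chains import ConversationChain
from langchain.memory import ConversationBufferMemory

memory = ConversationBufferMemory()  # keeps the full transcript of the conversation
chain = ConversationChain(llm=llm, memory=memory)  # reuses `llm` from the LLMChain example

chain.predict(input="Hi, I'm Sam.")
print(chain.predict(input="What's my name?"))  # the earlier turn is part of this prompt
```

This lets your assistant remember previous interactions. ConversationBufferMemory injects the stored history into every new prompt, so replies stay consistent across turns.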
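Buffer memory works by storing every turn and prepending the transcript to the next prompt. The sketch below models that mechanism in plain Python. `BufferMemory`, `converse`, and `fake_llm` are hypothetical illustrations, not LangChain classes. The `Human:`/`AI:` prefixes are an assumption chosen for readability, and the fake model simply reports how many earlier user turns it can see.

```python
from typing import Callable, List, Tuple

class BufferMemory:
    """Hypothetical, simplified buffer memory: keeps every turn verbatim."""

    def __init__(self) -> None:
        self.turns: List[Tuple[str, str]] = []

    def history(self) -> str:
        return "\n".join(f"Human: {h}\nAI: {a}" for h, a in self.turns)

    def save(self, human: str, ai: str) -> None:
        self.turns.append((human, ai))

def converse(llm: Callable[[str], str], memory: BufferMemory, user_input: str) -> str:
    """Build a prompt from stored history plus the new input, call the model, save the turn."""
    prompt = f"{memory.history()}\nHuman: {user_input}\nAI:".lstrip()
    reply = llm(prompt)
    memory.save(user_input, reply)
    return reply

def fake_llm(prompt: str) -> str:
    """Hypothetical model stand-in: counts the earlier human turns in its prompt."""
    return f"I can see {prompt.count('Human:') - 1} earlier message(s)."

memory = BufferMemory()
print(converse(fake_llm, memory, "Hi, I'm Sam."))
print(converse(fake_llm, memory, "What's my name?"))
```

The trade-off is visible here too: the prompt grows with every turn. Long conversations eventually need a windowed or summarizing memory instead of a full buffer.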

🧱 Real-World Use Cases

- Chatbots with memory

- Dynamic interview assistants

- Product recommendation flows

- Retrieval-based Q&A (RAG)


🚀 TL;DR

- Chains let you structure complex LLM workflows

- Use LLMChain, SequentialChain, RouterChain depending on your logic

- Combine with memory and retrievers for more contextual intelligence

Chains are the glue that brings LangChain modules together into production-grade apps.

📍 Next up: Agents & Tools!
