How to Build an Agentic AI Framework in 2025: Solving Gaps with Robust Guardrails

Imagine this: You have an AI system that checks your email, negotiates with vendors, schedules your meetings, and recommends stocks to buy—autonomously. Welcome to the world of Agentic AI. I have high hopes that this post will motivate you to build your own Agentic AI framework in 2025 — tailored, modular, and ready to power real business cases.

Not only will you learn how to build it, but also why existing frameworks like AutoGen, LangChain, and LangGraph fall short in key areas like guardrails and misuse prevention.

🌐 The Rise of Agentic AI

Agentic AI refers to systems built from autonomous AI agents—entities that perceive, reason, plan, act, and often collaborate to achieve goals.

As of 2025, we’re witnessing:

- Multi-agent ecosystems managing workflows

- LLMs making decisions using external tools

- Business processes that are partially, if not fully, automated

- Agents that can learn from feedback and adapt over time

“Imagine creating an AI that plans your day, negotiates deals, or manages your projects. Here’s how to start.”

🧩 What is an Agentic AI Framework?

An Agentic AI Framework is a structured environment that enables the development, deployment, and management of autonomous AI agents. It provides the necessary tools, libraries, and APIs to create agents that can perceive their environment, reason about it, plan actions, and execute tasks. This framework typically includes:

- Agent Design Patterns: Templates for common agent behaviors (e.g., perception, planning, action)

- Tool Integration: APIs and libraries to connect agents with external systems (e.g., databases, web services)

- Memory Management: Mechanisms to store and retrieve information across agent sessions

- Ethical Guardrails: Built-in checks to ensure agents operate within safe and ethical boundaries

- Monitoring and Logging: Tools to track agent performance, decisions, and interactions for auditing and improvement

Top Agentic AI Frameworks: Capabilities and How They Work

Let’s review three leading frameworks to understand their strengths:

Microsoft AutoGen

- Capabilities: Supports multi-agent conversations, integrates with LLMs (e.g., OpenAI, Azure), and offers sandboxed code execution in Docker containers (e.g., via DockerCommandLineCodeExecutor). Ideal for IT automation.

- How It Works: Agents collaborate via conversational patterns, e.g., diagnosing server issues by coordinating tasks.

LangChain

- Capabilities: Excels in single-agent workflows, memory management, and tool integration (e.g., APIs). Used for chatbots in finance or customer service.

- How It Works: Chains tasks with LangChain Expression Language (LCEL), using LangSmith for observability.

LangGraph

- Capabilities: Enables graph-based, stateful multi-agent workflows with human oversight. Suited for sequential tasks like medical diagnostics.

- How It Works: Tasks are modeled as nodes and edges for precise control.
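To make the nodes-and-edges idea concrete, here is a minimal sketch in plain Python. It does not use the LangGraph API; `run_graph`, `next_node`, the node names, and the state keys are all hypothetical and chosen only for illustration, and the triage rule is a toy stand-in for a model call.

```python
from typing import Any, Callable, Dict, Optional

State = Dict[str, Any]
Node = Callable[[State], State]

def collect_symptoms(state: State) -> State:
    # Node 1: normalize the raw input into a list of symptoms.
    text = str(state["input"])
    return {**state, "symptoms": [s.strip() for s in text.split(",")]}

def triage(state: State) -> State:
    # Node 2: a toy rule standing in for a model call.
    return {**state, "urgent": "chest pain" in state["symptoms"]}

def human_review(state: State) -> State:
    # Node 3a: hand the case to a person instead of acting alone.
    return {**state, "status": "needs human review"}

def routine_advice(state: State) -> State:
    # Node 3b: the non-urgent path.
    return {**state, "status": "routine follow-up"}

NODES: Dict[str, Node] = {
    "collect_symptoms": collect_symptoms,
    "triage": triage,
    "human_review": human_review,
    "routine_advice": routine_advice,
}

def next_node(current: str, state: State) -> Optional[str]:
    # Edges: one fixed transition plus one conditional branch after triage.
    if current == "collect_symptoms":
        return "triage"
    if current == "triage":
        return "human_review" if state["urgent"] else "routine_advice"
    return None  # terminal nodes have no outgoing edge

def run_graph(start: str, state: State) -> State:
    # Walk the graph, threading the shared state through each node.
    current: Optional[str] = start
    while current is not None:
        state = NODES[current](state)
        current = next_node(current, state)
    return state
```

Calling `run_graph("collect_symptoms", {"input": "cough, chest pain"})` walks the urgent branch. Keeping the edges in one function makes the control flow easy to audit, which is the main appeal of the graph approach.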

Chart: Comparing the strengths of AutoGen, LangChain, and LangGraph.

Why Not Use the Existing Frameworks?

These frameworks have notable limitations:

| Framework | Key Drawbacks |
|---|---|
| AutoGen | Steep learning curve, no no-code interface, less structured workflows, limited integrations. |
| LangChain | Complex abstractions, poor backward compatibility, LangSmith dependency, weak multi-agent support. |
| LangGraph | Complex graph setup, no native secure code execution, ecosystem lock-in, limited parallel processing. |

Critical Gap: None offer robust, built-in guardrails for ethical behavior or misuse prevention, leaving developers to implement custom solutions, which can be inconsistent.

🚀 Why Now? The Need for a New Framework

The current landscape of Agentic AI frameworks has significant gaps:

| Framework | Key Features | Limitations |
|---|---|---|
| AutoGen | Multi-agent conversations, secure code execution, LLM integration | Steep learning curve, no no-code interface, less structured workflows |
| LangChain | Single-agent workflows, memory management, tool integration | Complex abstractions, poor backward compatibility, weak multi-agent support |
| LangGraph | Graph-based, stateful multi-agent workflows, human oversight | Complex graph setup, no native secure code execution, limited parallel processing |

These frameworks excel in specific areas but lack:

- Robust guardrails to prevent misuse

- Ethical decision-making to ensure safe behavior

- No-code interfaces for non-technical users

- Modular design for easy customization

- Scalability for complex, real-world applications

Are you convinced?

Building a new Agentic AI framework addresses these gaps and provides significant benefits:

- ✅ Tailor agents to your domain (finance, healthcare, support)

- 💸 Avoid SaaS costs and vendor lock-in

- 🧠 Learn and experiment with cutting-edge AI workflows

- 🔒 Implement built-in guardrails to prevent misuse

- 📈 Scale from simple tasks to complex multi-agent systems

- 🛠️ Build a modular architecture that can evolve with your needs

- 🌍 Contribute to the open-source Agentic AI ecosystem

Key Core Components to Focus On

If you are convinced that a new Agentic AI framework is worth building, the next step is to understand the core components that enable agents to function effectively. Here’s a breakdown of the essential components:

| Component | Role |
|---|---|
| User Intent | Interpret the user’s goal (e.g., buy stock, write email) |
| Perception | Convert raw input (text, images, voice) to structured meaning |
| Reasoning | Infer implications, prioritize actions, understand context |
| Planning | Sequence multi-step actions to achieve the user’s goal |
| Action | Execute external tasks: API calls, transactions, file updates |
| Memory | Retain short/long-term knowledge for context-aware behavior |
| Ethics | Apply business rules, safety checks, or compliance boundaries |
| Continuous Learning | Adapt behavior based on feedback or long-term performance metrics |

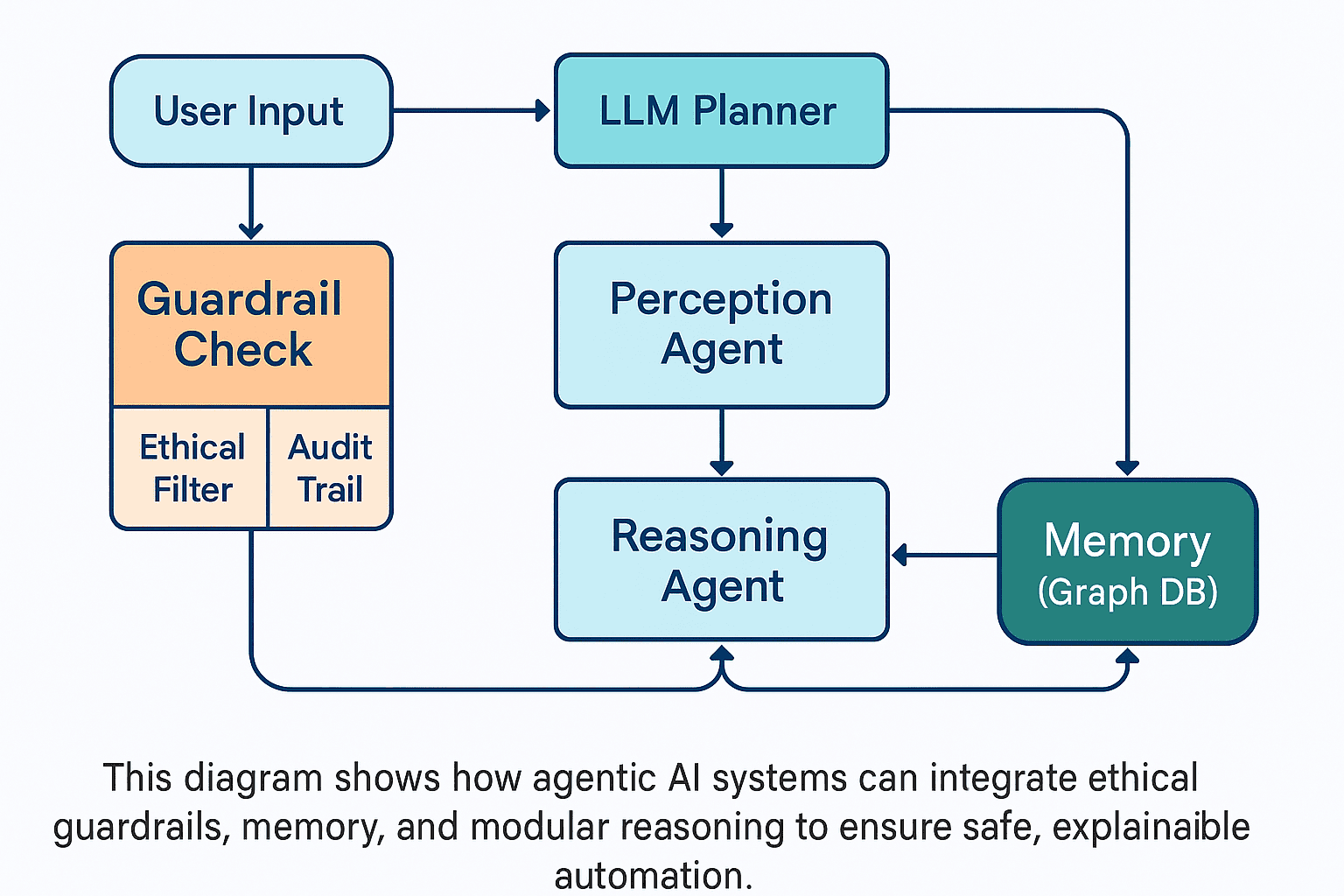
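One way to see how these components fit together is a single toy class where each method plays one role from the table. This is only a sketch under simplifying assumptions: the `Agent` class, its method names, the hard-coded plans, and the block list are all hypothetical, and continuous learning is left out to keep it short.

```python
from dataclasses import dataclass, field
from typing import Dict, List

@dataclass
class Agent:
    memory: List[str] = field(default_factory=list)           # Memory
    blocked_actions: frozenset = frozenset({"delete_files"})  # Ethics rules

    def perceive(self, raw: str) -> Dict[str, str]:
        # User intent + perception: turn raw text into a structured request.
        verb, _, target = raw.strip().lower().partition(" ")
        return {"intent": verb, "target": target}

    def plan(self, request: Dict[str, str]) -> List[str]:
        # Reasoning + planning: map an intent to an ordered list of steps.
        plans = {
            "email": ["draft_email", "send_email"],
            "cleanup": ["delete_files"],
        }
        return plans.get(request["intent"], [])

    def allowed(self, step: str) -> bool:
        # Ethics: reject any step on the block list.
        return step not in self.blocked_actions

    def act(self, step: str, target: str) -> str:
        # Action: a real agent would call an external API here.
        return f"{step}({target})"

    def run(self, raw: str) -> List[str]:
        request = self.perceive(raw)
        results = []
        for step in self.plan(request):
            if not self.allowed(step):
                results.append(f"blocked: {step}")
                continue
            results.append(self.act(step, request["target"]))
        self.memory.append(raw)  # remember the request for later context
        return results
```

For example, `Agent().run("email alice")` produces the two email steps, while `Agent().run("cleanup tmp")` is stopped by the ethics check before any action runs.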

Guardrailing and Misuse Prevention: A Core Feature

To ensure safety and trust, a new framework must prioritize guardrails to prevent misuse. Here’s how:

- Ethical Decision-Making Layer: Embed a module to evaluate actions against ethical principles (e.g., fairness, transparency). Use rule-based filters or a fine-tuned LLM to flag biased or harmful outputs.

- Example: An agent generating customer responses checks for biased language before replying.

- Input Validation and Sanitization: Prevent malicious inputs (e.g., prompt injection attacks) by sanitizing user queries and limiting agent capabilities to predefined scopes.

- Example: Restrict a healthcare agent from accessing non-medical APIs.

- Audit Trails and Explainability: Log all agent actions with traceable reasoning (e.g., using a vector database) to enable audits and ensure transparency.

- Example: A financial agent logs why it recommended a stock for regulatory compliance.

- Runtime Monitoring: Implement real-time monitoring to detect anomalies (e.g., unexpected API calls) and halt agents if misuse is suspected.

- Example: Stop an IoT agent if it attempts unauthorized device access.

- User-Defined Constraints: Allow users to set boundaries (e.g., “don’t share personal data”) via a no-code interface, ensuring flexibility and safety.

- Example: A smart home agent respects user privacy settings, avoiding external data sharing.

Why It Matters: Built-in guardrails reduce developer burden, ensure compliance (e.g., GDPR, HIPAA), and prevent misuse, like agents being manipulated to leak data or act harmfully.
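The guardrail ideas above can be sketched in a few dozen lines. The example below combines input validation, a predefined tool scope, an audit trail, and a simple call budget as a stand-in for runtime monitoring. Everything here is an assumption made for illustration: the `GuardedAgent` class, the two regex patterns, and the in-memory log are hypothetical, and a short deny-list like this is nowhere near a complete defense against prompt injection.

```python
import json
import re
import time
from typing import Any, Callable, Dict, List

# Assumed patterns: a tiny illustrative deny-list, not a complete defense.
INJECTION_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.I),
    re.compile(r"reveal .*system prompt", re.I),
]

class GuardrailError(Exception):
    """Raised when a request is rejected by a guardrail."""

class GuardedAgent:
    def __init__(self, allowed_tools: Dict[str, Callable[..., Any]], max_calls: int = 5):
        self.allowed_tools = allowed_tools  # predefined scope
        self.max_calls = max_calls          # runtime-monitoring threshold
        self.calls = 0
        self.audit_log: List[str] = []      # append-only audit trail

    def _audit(self, event: str, **details: Any) -> None:
        # Store each event as one JSON line so it can be shipped to a log store.
        self.audit_log.append(json.dumps({"ts": time.time(), "event": event, **details}))

    def validate(self, user_input: str) -> str:
        # Input validation: reject text matching a known injection pattern.
        for pattern in INJECTION_PATTERNS:
            if pattern.search(user_input):
                self._audit("input_rejected", reason=pattern.pattern)
                raise GuardrailError("input rejected")
        return user_input.strip()

    def call_tool(self, name: str, reason: str, **kwargs: Any) -> Any:
        # Scope check: only tools registered for this agent may run.
        if name not in self.allowed_tools:
            self._audit("tool_denied", tool=name)
            raise GuardrailError(f"tool {name!r} is out of scope")
        # Runtime monitoring: halt once the call budget is exhausted.
        self.calls += 1
        if self.calls > self.max_calls:
            self._audit("halted", tool=name)
            raise GuardrailError("call budget exceeded; agent halted")
        result = self.allowed_tools[name](**kwargs)
        self._audit("tool_called", tool=name, reason=reason)  # explainability
        return result
```

A healthcare agent built this way would be constructed with medical tools only, so a request for a trading tool fails the scope check and leaves a `tool_denied` entry in the log.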

Chart: Workflow of a new Agentic AI framework with guardrails and memory.

🧠 Decision Engine: LLM or Reinforcement Learning?

One of the biggest architectural questions is: What powers the decision-making?

Use LLMs (e.g., Claude, GPT-4, Mistral) when you need:

- Reasoning from open-ended, vague input

- Language-rich tasks (summarizing, planning, parsing intent)

- One-shot decisions with limited feedback

Use Reinforcement Learning (RL) when:

- You need agents to optimize for long-term goals (e.g., maximizing ROI)

- There’s a clear reward function and feedback loop

- You want autonomous fine-tuning over time

Pro Tip: Start with LLM-driven reasoning, then layer RL for optimization loops.
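As a rough illustration of that layering, the sketch below lets a stubbed LLM propose candidate plans and uses an epsilon-greedy bandit, one of the simplest RL techniques, to learn which plan pays off. The names `propose_plans`, `EpsilonGreedySelector`, and `simulate`, the plan labels, and the success rates are all made up for the example; a real system would replace the stub with a model call and the simulated reward with a business metric.

```python
import random
from typing import Dict, List

def propose_plans(goal: str) -> List[str]:
    # Stand-in for an LLM call that returns candidate plans for a goal.
    return ["email_first", "call_first", "chat_first"]

class EpsilonGreedySelector:
    def __init__(self, epsilon: float = 0.1, seed: int = 0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts: Dict[str, int] = {}
        self.values: Dict[str, float] = {}  # running mean reward per plan

    def choose(self, plans: List[str]) -> str:
        # Explore with probability epsilon, otherwise exploit the best estimate.
        if self.rng.random() < self.epsilon:
            return self.rng.choice(plans)
        return max(plans, key=lambda p: self.values.get(p, 0.0))

    def update(self, plan: str, reward: float) -> None:
        # Incremental mean: v <- v + (r - v) / n
        n = self.counts.get(plan, 0) + 1
        v = self.values.get(plan, 0.0)
        self.counts[plan] = n
        self.values[plan] = v + (reward - v) / n

def simulate(rounds: int = 500) -> Dict[str, int]:
    # Made-up environment: each plan succeeds with a fixed probability.
    success_rate = {"email_first": 0.2, "call_first": 0.8, "chat_first": 0.5}
    selector = EpsilonGreedySelector(epsilon=0.2)
    for _ in range(rounds):
        plan = selector.choose(propose_plans("renew contract"))
        reward = 1.0 if selector.rng.random() < success_rate[plan] else 0.0
        selector.update(plan, reward)
    return selector.counts  # how often each plan was tried
```

The LLM handles the open-ended part (coming up with plans), while the bandit handles the part with a clear reward signal (which plan works best over time).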

🛠️ Building Blocks of an Agentic AI Framework

To build your Agentic AI framework, you need to combine several technologies and design patterns. Here’s a high-level architecture:

    flowchart TD
        A[User Input] --> B[Perception Agent]
        B --> C[Planning Agent]
        C --> D[Action Agents]
        D --> E[Tool APIs]
        E --> F[Memory System]
        F --> G[Ethics Guardrails]
        G --> H[Feedback Loop]
        H --> C

Diagram: High-level architecture of an Agentic AI framework with perception, planning, action, memory, ethics, and feedback loops.

🛠️ Step-by-Step: Building Your Agentic Framework

Step 1: Define the Use Case

Pick a clear scenario:

- Ex: “Automate my meeting scheduling”

- Roles: PerceptionAgent, PlannerAgent, EmailAgent

Step 2: Pick Your Stack

- LLM: Claude 3, GPT-4, Mistral, Grok (via x.ai)

- LangChain / LangGraph for logic

- Python + FastAPI for control APIs

- Vector DBs: FAISS, Chroma, Pinecone

- Memory: Redis or Neo4j (graph memory)

Step 3: Modular Agent Design

Split into services:

- PerceptionAgent: Parses user inputs

- PlannerAgent: Calls LLM to decide which tools/steps to execute

- ActionAgents: Tools like SendEmailTool, StockTool, TradeExecutor
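Here is a minimal sketch of that split, assuming each agent is a small class and the LLM is any callable that maps a prompt to a tool name. The tool functions, the `TOOLS` registry, the `allowed` list, and `fake_llm` are hypothetical stand-ins so the example runs offline; none of this is a specific library's API.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical tools; real ones would call an email or market-data API.
def send_email_tool(to: str) -> str:
    return f"email sent to {to}"

def stock_tool(symbol: str) -> str:
    return f"quote requested for {symbol}"

TOOLS: Dict[str, Callable[[str], str]] = {
    "SendEmailTool": send_email_tool,
    "StockTool": stock_tool,
}

class PerceptionAgent:
    def parse(self, text: str) -> Dict[str, str]:
        # Very rough parsing: first word is the task, the rest is the argument.
        task, _, arg = text.strip().partition(" ")
        return {"task": task.lower(), "arg": arg}

class PlannerAgent:
    def __init__(self, llm: Callable[[str], str]):
        self.llm = llm  # any callable that maps a prompt to a tool name

    def plan(self, parsed: Dict[str, str]) -> List[Tuple[str, str]]:
        prompt = f"Pick one tool from {sorted(TOOLS)} for task: {parsed['task']}"
        return [(self.llm(prompt), parsed["arg"])]

class ActionAgent:
    def __init__(self, allowed: List[str]):
        self.allowed = set(allowed)  # role-based tool access

    def execute(self, steps: List[Tuple[str, str]]) -> List[str]:
        results = []
        for tool_name, arg in steps:
            # Refuse tools that are unknown or outside this agent's role.
            if tool_name not in self.allowed or tool_name not in TOOLS:
                results.append(f"refused: {tool_name}")
            else:
                results.append(TOOLS[tool_name](arg))
        return results

def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline.
    return "SendEmailTool" if "task: email" in prompt else "StockTool"
```

Wiring them together looks like `ActionAgent(["SendEmailTool"]).execute(PlannerAgent(fake_llm).plan(PerceptionAgent().parse("Email bob@example.com")))`. Because the planner only returns tool names, the action agent stays the single place where access is enforced.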

Step 4: Add Memory and Ethics

- Store conversation summaries or facts

- Add rules: “Don’t buy stocks if RSI > 70”, “Don’t schedule meetings after 6pm”
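The two example rules translate naturally into small predicate functions, and a bounded buffer is enough for a first version of memory. This is a sketch under assumptions: the `Rule` and `Memory` classes and the action dictionary keys (`type`, `rsi`, `start`) are hypothetical, and "after 6pm" is interpreted here as any start time at 18:00 or later.

```python
from collections import deque
from dataclasses import dataclass
from datetime import datetime
from typing import Any, Callable, Deque, Dict, List, Optional

@dataclass
class Rule:
    description: str
    violated: Callable[[Dict[str, Any]], bool]  # True when the action breaks the rule

RULES = [
    Rule("Don't buy stocks if RSI > 70",
         lambda a: a["type"] == "buy_stock" and a["rsi"] > 70),
    # Assumption: a meeting starting at 18:00 or later counts as "after 6pm".
    Rule("Don't schedule meetings after 6pm",
         lambda a: a["type"] == "schedule_meeting" and a["start"].hour >= 18),
]

def check(action: Dict[str, Any]) -> Optional[str]:
    # Return the first violated rule's description, or None if the action is fine.
    for rule in RULES:
        if rule.violated(action):
            return rule.description
    return None

class Memory:
    def __init__(self, max_items: int = 3):
        # A bounded deque drops the oldest fact once the limit is reached.
        self.facts: Deque[str] = deque(maxlen=max_items)

    def remember(self, fact: str) -> None:
        self.facts.append(fact)

    def recall(self) -> List[str]:
        return list(self.facts)
```

For instance, `check({"type": "buy_stock", "rsi": 75})` returns the RSI rule's description, which the planner can surface to the user instead of executing the trade.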

Step 5: Test & Deploy

- Simulate agent tasks locally (Streamlit/Gradio UI)

- Add logging, fallback prompts, and LangSmith for tracing

- Deploy on cloud (e.g., Render, Fly.io, Hugging Face Spaces)
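Logging and fallback prompts can be prototyped with the standard library before adding a tracing service. The sketch below assumes the LLM is any callable that takes a prompt and returns text; `run_with_fallback` and the validity check are hypothetical helpers, not part of any framework.

```python
import logging
from typing import Callable, Sequence

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("agent")

def run_with_fallback(
    llm: Callable[[str], str],
    prompts: Sequence[str],
    is_valid: Callable[[str], bool],
) -> str:
    # Try each prompt in order; log every attempt so failures can be traced.
    for attempt, prompt in enumerate(prompts, start=1):
        try:
            reply = llm(prompt)
        except Exception:
            logger.exception("attempt %d raised an error", attempt)
            continue
        if is_valid(reply):
            logger.info("attempt %d succeeded", attempt)
            return reply
        logger.warning("attempt %d returned an unusable reply: %r", attempt, reply)
    raise RuntimeError("all prompts failed")
```

In local tests you can pass a stub in place of the model, for example one that returns an empty string first and a usable reply second, to confirm that the second, more explicit prompt is reached.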

🧩 Common Challenges & Solutions

| Challenge | Solution |
|---|---|
| Multi-agent collaboration | Use LangGraph or CrewAI with FIPA-inspired coordination |
| Ethical risks | Implement rule-based filters + manual approval workflows |
| Tool routing gone wrong | Add better tool descriptions or enforce role-based tool access |
| High cost of LLMs | Use local LLaMA/Mistral models with Ollama |
| Agents not learning/improving | Add feedback loops + continuous learning or fine-tuning mechanisms |

🔮 The Future of Agentic AI

The future of Agentic AI is bright, with exciting possibilities:

- Autonomous agents that can negotiate, plan, and execute complex tasks

- Agents that can learn from user feedback and adapt over time

- Agents that can collaborate with each other to solve complex problems

- Agents that can operate in real-time, integrating with IoT devices and smart environments

- Agents that can understand and process multimodal inputs (text, voice, images)

- Agents that can operate in a decentralized manner, leveraging blockchain for trust and security

- Agents that can self-correct and improve their performance through reinforcement learning

- Agents that can operate in a fully autonomous manner, requiring minimal human intervention

🚀 The Road Ahead: Building Your Agentic AI Framework

The future of Agentic AI is not just about building agents, but creating a framework that allows you to easily develop, deploy, and manage these agents. Here are some exciting directions to explore:

- Modular agent design patterns for easy customization

- No-code interfaces for non-technical users to create agents

- Robust guardrails to prevent misuse and ensure ethical behavior

- Integration with existing tools and APIs for seamless workflows

- Scalable architectures that can handle complex, multi-agent systems

- Real-time monitoring and feedback loops for continuous improvement

- Open-source contributions to build a community around Agentic AI

- Decentralized agent networks for trust and security

🏗️ Building Your First Agentic AI Framework

Ready to build your own Agentic AI framework? Here’s a quick roadmap:

- Define Your Use Case: Start with a specific problem (e.g., scheduling meetings, managing emails).

- Choose Your Stack: Pick LLMs, frameworks (LangChain, LangGraph), and tools (Redis, FastAPI).

- Design Modular Agents: Create agents for perception, planning, action, and memory.

- Implement Guardrails: Add ethical decision-making, input validation, and monitoring.

- Test and Iterate: Simulate agent tasks, add logging, and refine based on feedback.

- Deploy: Use cloud platforms (Render, Fly.io) for hosting and scaling.

- Share and Collaborate: Open-source your framework, share on GitHub, and engage with the community.

- Scale: Start with simple agents, then build complex multi-agent systems.

- Contribute to the Ecosystem: Share your learnings, improvements, and innovations with the community.

- Stay Updated: Follow advancements in Agentic AI, LLMs, and ethical AI practices.

🏁 Conclusion

Building a new Agentic AI framework in 2025 is not just an opportunity—it’s a necessity. With the right tools, design patterns, and ethical guardrails, you can create a powerful system that empowers autonomous agents to solve real-world problems.

- Start building today: The future of Agentic AI is in your hands. Whether you’re a solo developer or part of a team, the time to innovate is now.

- Remember: Agentic AI is not just about automation; it’s about creating intelligent systems that can learn, adapt, and operate ethically in our complex world.

Pro Tip: Start with a simple agent that manages a single task, like scheduling meetings. Then, gradually expand its capabilities to handle more complex workflows and interactions.

Example: Start with a calendar agent that checks your availability, then add features to negotiate meeting times with others, and finally integrate it with your email and task management systems.

📢 Call to Action

Got an Agentic AI idea? Built a mini framework?

Let’s build the future—agent by agent.

Enjoyed this post? Join our community for more insights and discussions!

👉 Share this article with your friends and colleagues